Sounds good, but why do I need this?

Calculating cost at scale is a critical task before running a Production application anywhere. Paying a few dollars for a development environment is one thing; paying for Production cloud infrastructure that your customers and your business depend on is quite another. If you don’t know well in advance how much you’ll pay for your live applications, you could put your business at risk.

You can calculate AWS cost using manual tools, such as the AWS Simple Monthly Calculator, but it’s time-consuming and you need to know many system metrics to make accurate price calculations. Alternatively, you can run some tests and then wait about a day to analyze your AWS Cost and Usage Reports, or use AWS Cost Explorer. Those are all useful tools, but you’ll still need an automated way to estimate cost, and to see how it correlates to other metrics in your application, right away.

That’s why some time ago I built the AWS Near Real-time Price Calculator tool: an easy, automated way to estimate AWS cost in near real time, based on live CloudWatch metrics.

Ok, so what’s new?

The initial version of this tool only included support for EC2. Then I added other services such as ELB, EBS and RDS. Now I’m happy to include other essential services that are used in many Serverless applications:

- Lambda

- Kinesis

- DynamoDB

Remind me… how does it work?

Installation instructions

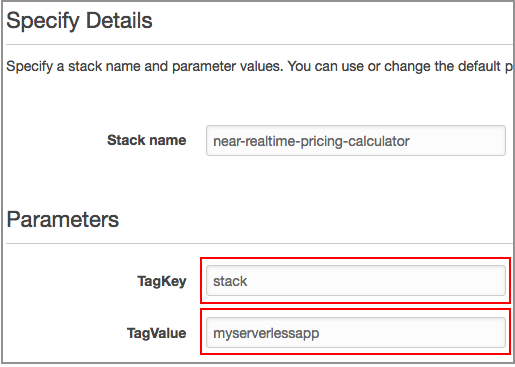

I created a CloudFormation template, which simplifies the installation process down to a few clicks.

The first step is to tag all the AWS resources you want to estimate cost for. For example, make sure your Lambda functions and DynamoDB tables have a common tag, such as stack=myserverlessapp.
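If you would rather tag from a script than from the console, a minimal sketch could look like the one below. It assumes you pass in clients created with `boto3.client("lambda")` and `boto3.client("dynamodb")`; the function name `tag_resources` and the ARNs are placeholders of mine, not part of the tool.

```python
def tag_resources(lambda_client, dynamodb_client, function_arns, table_arns,
                  key="stack", value="myserverlessapp"):
    """Apply one common tag to Lambda functions and DynamoDB tables."""
    for arn in function_arns:
        # The Lambda API takes tags as a plain dict.
        lambda_client.tag_resource(Resource=arn, Tags={key: value})
    for arn in table_arns:
        # The DynamoDB API takes tags as a list of Key/Value pairs.
        dynamodb_client.tag_resource(
            ResourceArn=arn, Tags=[{"Key": key, "Value": value}]
        )
    return len(function_arns) + len(table_arns)

# Usage (needs boto3 and AWS credentials; ARNs are placeholders):
#   import boto3
#   tag_resources(boto3.client("lambda"), boto3.client("dynamodb"),
#                 ["<function-arn>"], ["<table-arn>"])
```

Passing the clients in keeps the sketch easy to try with stand-in objects before pointing it at a real account.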

The next step is to launch the CloudFormation stack:

Select your AWS region. Click on “Next” and you’ll see the following screen, where you specify the tag you want to estimate AWS cost for.

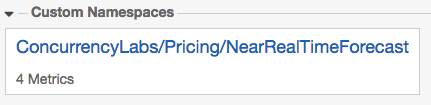

Continue with the CloudFormation stack creation and wait 5-10 minutes. Then go to the CloudWatch console and find the custom metrics generated by this tool.

And that’s it! You can now view cost estimation metrics by dimensions ServiceName and Tag. You can add them to a CloudWatch Dashboard and monitor them alongside other system metrics!

Architecture

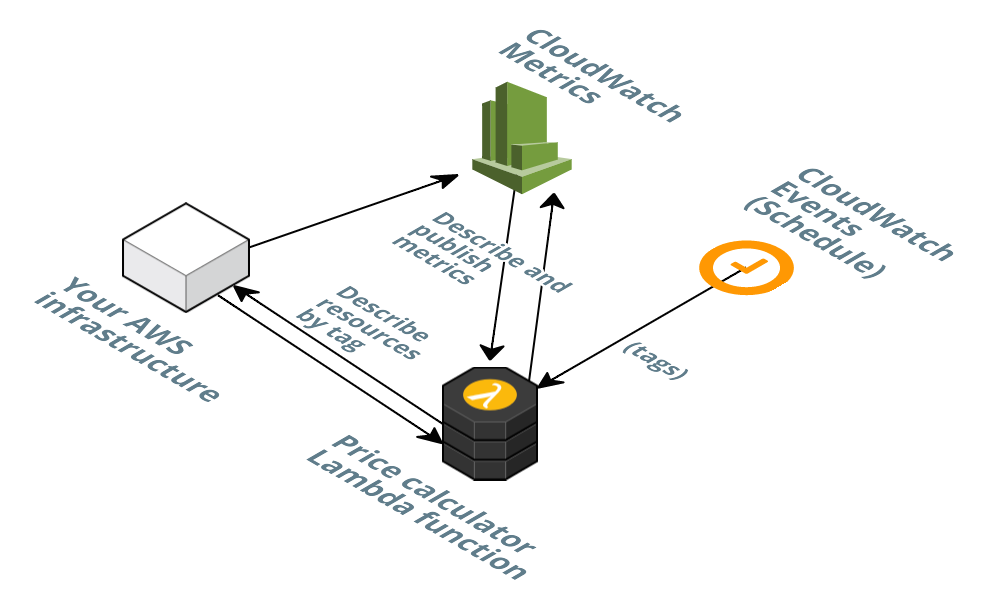

During the CloudFormation stack creation, you specified the tag to estimate cost for. The CloudFormation template creates a CloudWatch Scheduled Event that triggers the Lambda function once every 5 minutes and sends the tag key/value pair to it as an input parameter.

On each invocation, the function finds AWS resources with the given tag and looks for relevant CloudWatch metrics and configuration values. Based on those, it calculates the price and publishes the result as a CloudWatch metric.
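To make that flow a bit more concrete, here is a rough sketch of two of its pieces: projecting usage seen in one 5-minute window to a monthly figure, and shaping the result as an entry for CloudWatch’s `put_metric_data`. The 30-day month, the metric name `EstimatedMonthlyCost`, the `stack=myserverlessapp` dimension value and the cost value are all assumptions of mine for illustration; the actual implementation lives in the repo.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumption: a 30-day month
WINDOW_SECONDS = 5 * 60             # matches the 5-minute schedule

def project_monthly(usage_in_window, window_seconds=WINDOW_SECONDS):
    """Scale usage observed in one window up to a full month."""
    return usage_in_window * SECONDS_PER_MONTH / window_seconds

def build_metric_datum(service_name, tag, monthly_cost):
    """Build one entry for the MetricData list of put_metric_data."""
    return {
        "MetricName": "EstimatedMonthlyCost",  # hypothetical metric name
        "Dimensions": [
            {"Name": "ServiceName", "Value": service_name},
            {"Name": "Tag", "Value": tag},
        ],
        "Value": monthly_cost,
        "Unit": "None",
    }

# 1,000 invocations in 5 minutes project to 8,640,000 per 30-day month.
monthly_invocations = project_monthly(1000)
# 1.73 is a placeholder cost, not a real result.
datum = build_metric_datum("lambda", "stack=myserverlessapp", 1.73)
```

Because the projection is linear, a short burst of traffic will briefly inflate the monthly estimate, which is exactly what makes it handy during load tests.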

This is the architecture:

If you want to calculate cost for more tags, all you have to do is configure more CloudWatch Scheduled Events that send a tag key/value pair to the Lambda function.
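As a sketch of what “one scheduled event per tag” could look like, the snippet below builds one JSON input per tag and shows a handler-side helper reading it back. The `{"tag": {"key": ..., "value": ...}}` shape and the second tag value are assumptions for illustration; check the repo for the exact input format the function expects.

```python
import json

def build_rule_inputs(tags):
    """Return one JSON input string per scheduled rule, one tag pair each."""
    return [json.dumps({"tag": {"key": k, "value": v}}) for k, v in tags]

def extract_tag(event):
    """Read the tag key/value pair that the scheduled event passes in."""
    tag = event["tag"]
    return tag["key"], tag["value"]

# Two tags mean two scheduled rules, each with its own constant input.
inputs = build_rule_inputs([("stack", "myserverlessapp"), ("stack", "billing")])
for raw in inputs:
    print(extract_tag(json.loads(raw)))
```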

For more details, here is the GitHub repo:

Let’s see it in action!

For this example I load-tested an application consisting of a Lambda function that processes data from a Kinesis stream and writes results to a DynamoDB table.

I provisioned a total of 7 shards in my Kinesis stream plus a total of 210 Write Capacity Units and 111 Read Capacity Units in DynamoDB. Lambda executions vary according to the number of records ingested in the Kinesis stream.
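For the provisioned part of this setup, the arithmetic is simple enough to sketch by hand. The hourly prices below are illustrative assumptions (check the AWS pricing pages for your region), as is the 720-hour month; the sketch covers only shard hours and provisioned capacity, not PUT Payload Units, storage or data transfer.

```python
HOURS_PER_MONTH = 720  # assumption: a 30-day month

# Illustrative hourly prices in USD; assumptions, not authoritative.
KINESIS_SHARD_HOUR = 0.015
DYNAMODB_WCU_HOUR = 0.00065
DYNAMODB_RCU_HOUR = 0.00013

def provisioned_monthly_cost(shards, wcu, rcu):
    """Monthly cost of provisioned Kinesis shards and DynamoDB capacity."""
    kinesis = shards * KINESIS_SHARD_HOUR * HOURS_PER_MONTH
    dynamodb = (wcu * DYNAMODB_WCU_HOUR + rcu * DYNAMODB_RCU_HOUR) * HOURS_PER_MONTH
    return {"kinesis": kinesis, "dynamodb": dynamodb}

costs = provisioned_monthly_cost(shards=7, wcu=210, rcu=111)
print({service: round(usd, 2) for service, usd in costs.items()})
```

With these assumed prices, the 7 shards come to about $75.60 per month and the DynamoDB capacity to about $108.67 per month.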

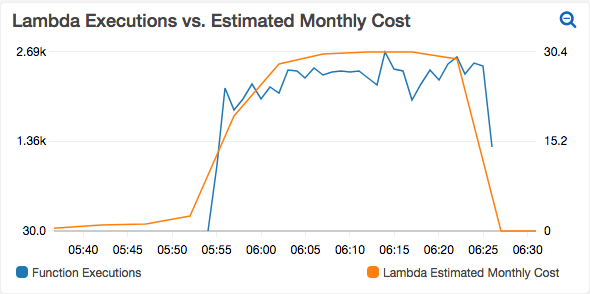

Then I put some load on the system. You can see the estimated Lambda price increasing with executions. I can now update variables that affect the number of Lambda executions (e.g. the batch size from Kinesis) and see the immediate impact on the monthly estimated cost.
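Here is a rough sketch of why batch size matters for the Lambda estimate. It assumes each invocation handles one full batch and, for simplicity, that average duration stays the same across batch sizes (in practice larger batches usually run longer). The prices are illustrative assumptions, the free tier is ignored, and the record volume, duration and memory values are made up.

```python
import math

# Illustrative prices in USD; assumptions, not authoritative. Free tier ignored.
PRICE_PER_REQUEST = 0.20 / 1_000_000
PRICE_PER_GB_SECOND = 0.0000166667

def lambda_monthly_cost(records_per_month, batch_size, avg_duration_ms, memory_mb):
    """Estimate Lambda cost when each invocation handles one full batch."""
    invocations = math.ceil(records_per_month / batch_size)
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return invocations * PRICE_PER_REQUEST + gb_seconds * PRICE_PER_GB_SECOND

# Same record volume, two batch sizes: fewer invocations, lower estimate.
for batch_size in (100, 500):
    cost = lambda_monthly_cost(1_000_000_000, batch_size, 200, 512)
    print(batch_size, round(cost, 2))
```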

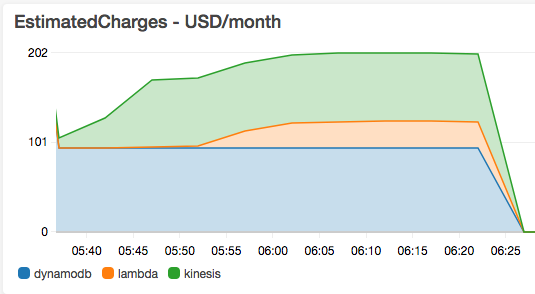

And here I can see the total monthly estimated cost, stacked by service:

Now I have metrics that I can place in a CloudWatch Dashboard and visualize next to system metrics such as Lambda Executions, Lambda Duration, Kinesis IncomingRecords, Kinesis IncomingBytes, DynamoDB ProvisionedWriteCapacityUnits, etc. I can update my DynamoDB table’s provisioned capacity and 5 minutes later see the impact on estimated cost. The same applies if I update the number of shards in my Kinesis stream or change the memory allocation in my Lambda functions.

This has saved me a lot of time, and it gives me a good idea of how much an application will cost in Production (and helps me avoid bad AWS billing surprises!).

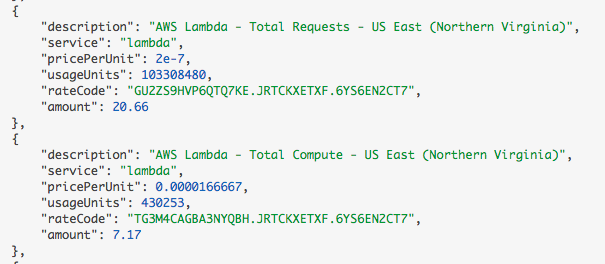

If you want to see more pricing details, just go to CloudWatch Logs and view the execution logs for the price calculator Lambda function. For each execution you will see a JSON log message with more information, such as price dimensions and usage amount considered in the price calculation. For example:

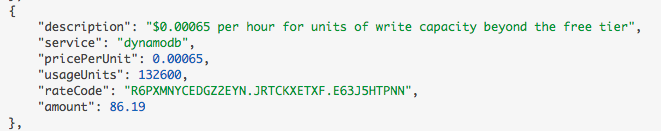

… or for DynamoDB calculations:

A word of caution

Keep in mind this is an unofficial tool, so use it at your own risk. The only source of truth for AWS cost is your AWS bill, followed by AWS Cost and Usage Reports. This tool is a very useful way to estimate monthly AWS cost, for testing purposes and in some Production environments. That being said, I use it very often in my project work and it has made my life much easier.

Also, this tool relies on CloudWatch metrics to gather price dimensions and estimate cost. Even though it covers many important price factors, there are some price dimensions that cannot be measured 100% accurately by using CloudWatch metrics. For example:

- Lambda data transfer. There is no CloudWatch metric that measures data transfer in Lambda. Even if there were one, there is no easy way to know in real time whether data is being transferred out to the internet, intra-region or inter-region, which determines the actual cost.

- Kinesis PUT Payload units. There is no CloudWatch metric that measures the number of PUT Payload Units entering a Kinesis stream. There is a way to calculate an approximation based on the number of incoming records and the average incoming data to the stream. However, it’s just an approximation.

- DynamoDB data transfer. There is no CloudWatch metric that measures data transfer out of a DynamoDB table. Also, not all data transfers are billed the same, and there is no way to differentiate in real time the type of data transfer that is taking place out of your tables.

- DynamoDB storage. There is no CloudWatch metric that tells you the size of your table or index, so this tool cannot calculate cost related to DynamoDB storage.
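To illustrate the Kinesis PUT Payload Unit approximation mentioned above, here is a minimal sketch. It assumes one unit covers up to 25 KB of a record (taken here as 25 * 1024 bytes) and works from the summed IncomingRecords and IncomingBytes metrics; the function name is mine and this is not necessarily how the tool computes it.

```python
import math

PUT_PAYLOAD_UNIT_BYTES = 25 * 1024  # assumption: one unit covers up to 25 KB

def approx_put_payload_units(incoming_records, incoming_bytes):
    """Approximate PUT Payload Units from IncomingRecords and IncomingBytes sums."""
    if incoming_records == 0:
        return 0
    avg_record_bytes = incoming_bytes / incoming_records
    # Every record is billed as at least one unit, rounded up per 25 KB chunk.
    units_per_record = max(1, math.ceil(avg_record_bytes / PUT_PAYLOAD_UNIT_BYTES))
    return incoming_records * units_per_record

print(approx_put_payload_units(1000, 1000 * 10 * 1024))  # 10 KB average record
print(approx_put_payload_units(1000, 1000 * 30 * 1024))  # 30 KB average record
```

Working from the average is what makes this an approximation: if most records are tiny and a few are just over 25 KB, the average stays under one unit and the estimate comes out slightly low.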

Keep in mind this is a Lambda function, which has a maximum timeout of 300 seconds. It is not designed to iterate over a very large number of AWS resources. Just make sure each tag covers a number of resources small enough that the Lambda function stays within the 300-second timeout.
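If you adapt the function for larger tags, one possible safeguard is to check the remaining execution time before handling each resource. The sketch below uses the Lambda context’s `get_remaining_time_in_millis()` method; the 10-second margin and the `process_resources` helper are assumptions of mine, not something the tool does.

```python
SAFETY_MARGIN_MS = 10_000  # assumption: stop 10 seconds before the timeout

def process_resources(resources, context, handle):
    """Handle resources one by one, stopping early if time is running out."""
    processed = []
    for resource in resources:
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break  # leave the rest unprocessed rather than time out mid-run
        handle(resource)
        processed.append(resource)
    return processed
```

Returning the list of processed resources makes it easy to log how far a run got, which is a hint that the tag should be split into smaller groups.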

To summarize

The near real-time AWS cost calculator can save you a lot of time and it’s a very useful tool for estimating AWS cost at scale. Please remember it has limitations.

Recommended use cases for this tool:

- In test environments, when doing load tests at the expected volume in Production. A common mistake when executing load tests is to focus ONLY on response times and throughput. This tool will help you quickly correlate performance and system metrics with AWS cost.

- In a Production environment, if you want to quickly detect cost anomalies for a particular application or stack.

And that’s it for now. I hope you found this useful!
