Do you want to migrate to AWS (or are you building a new web app), but are worried about potential code changes or vendor lock-in? Then I have good news: AWS Elastic File System (EFS) might be what you’re looking for! With EFS you can build or run your web applications in AWS with minimal or zero code changes, and at the same time enjoy all the advantages of using the cloud, such as elasticity, high availability and pay-as-you-go pricing.

I’ve been able to make EFS work in many cases, but it’s a tricky service. It’s very easy to run into performance traps and sub-optimal situations. In this article, I show you how to avoid common issues with EFS, based on my own project experience migrating and launching web applications using this service.

Just keep in mind that even though my findings might apply to many types of applications using EFS, this article is about applications that serve web content.

First, what is AWS Elastic File System (EFS)?

EFS is a shared file system that runs in the cloud. You can have pretty much any number of EC2 instances connecting to your Elastic File System and read or write files stored in it.

From your applications’ point of view, it works much like any other Network Attached Storage (NAS), since it uses a standard protocol (NFS).

You basically choose a local path in your EC2 instance (e.g. /mylocal/path/) and mount the EFS there. Once you do that, your application doesn’t really care that it’s accessing files stored in the EFS. It only cares about the path, which looks exactly like accessing files locally.

This means in most cases you don’t have to make any application changes in order to get started with EFS.

Let’s start with the advantages of using EFS

- Elasticity. EFS can grow as large as you need it to, without you configuring anything. Gone are the days where your applications fail because they ran out of disk space. If you delete files, you don’t have to worry about reducing the EFS size either. It simply adapts to your storage needs.

- Cost Savings. You only pay for the amount of storage you use, so you won’t overpay for over-provisioned storage.

- Minimal to zero code changes. You have a local path where you store your web application’s code or user files. All you need to do is mount it to the EFS and that’s it, no code updates required.

- Easiest path for migrating to AWS. Since minimal to no code updates are required, you can migrate web applications to AWS very quickly using EFS. No need to call any AWS APIs inside your code to get started.

- Minimal to zero vendor lock-in. Since it uses a standard protocol like NFS, you don’t need to call any AWS-specific APIs or make any changes that are specific to AWS. If one day you want to migrate out of AWS, you can do it very easily.

- You can start using an essential service such as Auto Scaling very quickly. Since you can use EFS to store files that you would otherwise store locally, you can set up stateless EC2 servers very quickly. This is an essential step toward taking advantage of a key cloud service such as Auto Scaling. Before EFS, modifying applications to work with stateless servers was one of the main barriers to using Auto Scaling. You can still use S3 or other external AWS data storage solutions (which I recommend as well), but with EFS you have the option to implement stateless applications with minimal code changes.

- Works with a handy service like Elastic Beanstalk. Elastic Beanstalk can significantly simplify how you launch web environments. The good news is that you can use EFS in an Elastic Beanstalk environment very easily.

- Works with on-premises servers as well as EC2 instances. If you use AWS Direct Connect, you can mount an EFS file system on your on-premises servers. I don’t recommend this type of hybrid setup for serving web content, due to latency. But you can certainly use EFS to store files that are accessed by on-premises applications.

- Built-in data consistency and file locking. Since you can have potentially many EC2 servers concurrently accessing the same files, you will want to have consistency and file locking. This is a hard problem to solve that you don’t have to worry about, since EFS takes care of it.

- Supports encryption at rest using AWS Key Management Service. If you need to encrypt the data stored in your EFS, it saves a lot of time to let AWS KMS take care of managing encryption keys for you.

- High availability. EFS gives you the data replication features you would expect from EBS, S3 or any other data storage solution in AWS. So, this is a big improvement over setting up and managing your own Network Attached Storage (NAS).

As you can see, there are a lot of advantages related to using AWS Elastic File System. If used properly, EFS is a VERY powerful AWS service. However, like I mentioned earlier, there are a lot of things you should be aware of.

So, here is what I’ve found can save you a LOT of headaches when using EFS:

Do load tests, early!

This is the first step for a smooth EFS implementation. Since the most common issues with web applications using EFS are performance-related, it is very important to measure performance as early as possible. You need to measure response times at the expected volume in Production for the most important scenarios in your web application.

I like Locust for executing load tests and I’ve built some tools to help me with the analysis of load test metrics. I typically get started with two scenarios:

- Single EC2 instance using Elastic Block Store (EBS)

- Single EC2 instance using EFS

Then, after I’m done with those two scenarios and any applicable optimizations, I add Auto Scaling and Elastic Load Balancer to the equation.

For this article, I set up a test WordPress site preloaded with 500 posts, and then I ran some load tests at 1 transaction per second. I launched my load generator in the same AWS region and Availability Zone as the web servers, so network latency contributes very little to the measured response times.

Here is what you can expect if you compare EBS performance vs. EFS, without doing any optimizations…

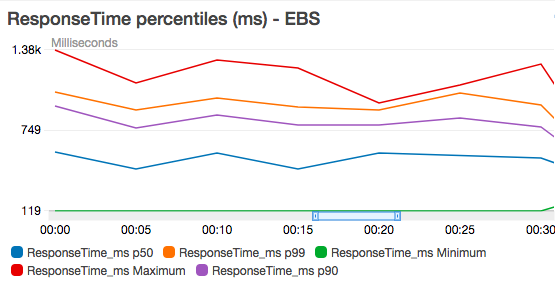

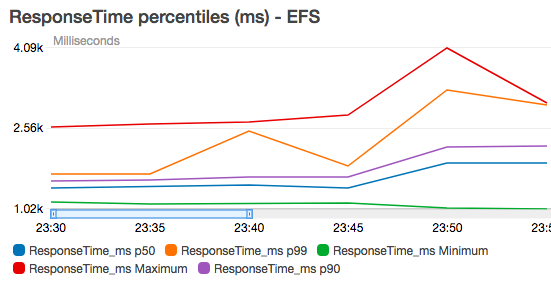

EBS, as expected, delivers a very decent minimum response time of ~119 ms. The maximum response time was ~1.38 s, and the median was ~700 ms:

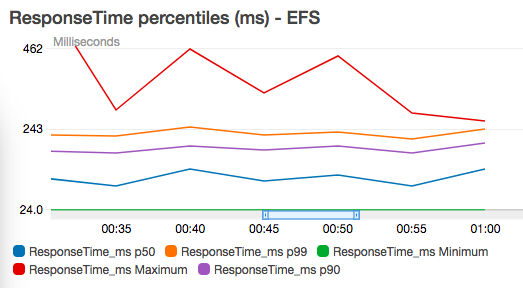

Now let’s take a look at EFS. Median response times were approximately 3x slower using EFS. EFS’s slowest response was twice as slow as EBS’s, and its fastest response was ~8x slower than with EBS!

As these metrics show, you are very likely to see out-of-the-box performance that is considerably slower with EFS than with block storage (EBS). Don’t get discouraged by this. In most situations, there’s some fine-tuning to do, so keep reading…
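If you collect raw response times from your load tests, the min/median/max comparison above is easy to reproduce for each scenario. The sketch below is a minimal Python version. The function names and the sample numbers are hypothetical. The samples were picked only to mirror the ratios described above, and they are not measurements.

```python
import statistics
from typing import Dict, Sequence


def summarize(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Min, median and max of raw response times (milliseconds)."""
    if not samples_ms:
        raise ValueError("need at least one response-time sample")
    return {
        "min": min(samples_ms),
        "median": statistics.median(samples_ms),
        "max": max(samples_ms),
    }


def slowdown(baseline: Dict[str, float], candidate: Dict[str, float]) -> Dict[str, float]:
    """How many times slower `candidate` is than `baseline`, per statistic."""
    return {stat: round(candidate[stat] / baseline[stat], 2) for stat in baseline}


# Illustrative samples only, chosen to mirror the ratios described above.
ebs = summarize([119, 450, 700, 900, 1380])
efs = summarize([950, 1800, 2100, 2400, 2760])
print(slowdown(ebs, efs))  # {'min': 7.98, 'median': 3.0, 'max': 2.0}
```

Comparing each statistic separately matters here. As the results above show, EFS hurts the fastest responses far more than the slowest ones.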

Analyze EFS metrics

After executing load tests, it is important to analyze EFS metrics. Here is a list of important metrics and some things you can do to improve performance:

- DataWriteIOBytes. Do you see a lot of write operations, when your application was supposed to do mostly reads? If this is the case, your application might be writing a lot of log entries or temporary files to the EFS (more on that below).

- DataReadIOBytes. In most cases, you also want to minimize the EFS read operations your application makes. This is the metric to watch after doing cache optimizations (more on that below, too).

- MetadataIOBytes. In my experience, this metric is usually very high compared to DataWriteIOBytes and DataReadIOBytes. Sometimes it is 20x or 50x higher, and I don’t have a good answer as to why. What I’ve noticed is that it often spikes considerably during operations such as chmod or a bulk file upload, and that this can reduce overall application performance. So, be careful when running such operations. A high value in this metric indicates a lot of back and forth between your web servers and the EFS that isn’t necessarily reading or writing file contents.

- PercentIOLimit. This metric shows how close your file system is to the I/O limit of the General Purpose performance mode, so it should remain as far from 100% as possible. If you see degraded performance and this metric is at 100%, that could explain it.

- BurstCreditBalance. This is the number of burst credits your file system has accumulated. You want PercentIOLimit to stay far from 100%, but you want this metric to stay as far as possible from zero. Burst credits let your application exceed its baseline throughput for a period of time. Once they run out, throughput drops back to the baseline. More on EFS throughput can be found here.
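To make this review repeatable, you could aggregate the metrics above over each load-test window and flag the patterns described in the list. Here is a minimal Python sketch. It assumes you have already pulled the sums, maxima and minima from CloudWatch. The EfsWindow type and the alert thresholds (20x metadata, 90% of the I/O limit) are hypothetical starting points, not AWS guidance.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EfsWindow:
    """EFS CloudWatch metrics aggregated over one load-test window (hypothetical shape)."""
    data_read_bytes: float       # Sum of DataReadIOBytes
    data_write_bytes: float      # Sum of DataWriteIOBytes
    metadata_bytes: float        # Sum of MetadataIOBytes
    max_percent_io_limit: float  # Maximum of PercentIOLimit (0-100)
    min_burst_credits: float     # Minimum of BurstCreditBalance (bytes)


def findings(w: EfsWindow, mostly_reads: bool = True,
             metadata_ratio_alert: float = 20.0,
             io_limit_alert: float = 90.0) -> List[str]:
    """Return human-readable warnings; the thresholds are assumptions to tune."""
    notes = []
    if mostly_reads and w.data_write_bytes > w.data_read_bytes:
        notes.append("writes exceed reads: look for temp files or logs on the EFS")
    data_bytes = w.data_read_bytes + w.data_write_bytes
    if data_bytes > 0 and w.metadata_bytes / data_bytes > metadata_ratio_alert:
        notes.append("metadata I/O dominates: check chmod/chown or bulk file operations")
    if w.max_percent_io_limit >= io_limit_alert:
        notes.append("PercentIOLimit is close to 100%")
    if w.min_burst_credits <= 0:
        notes.append("BurstCreditBalance hit zero: throughput fell back to baseline")
    return notes


# Hypothetical window from a read-heavy test: two warnings are expected here.
print(findings(EfsWindow(2e6, 9e6, 4e8, 35.0, 1.5e12)))
```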

Make sure you’re using NFS 4.1 to mount your EFS and follow the AWS-recommended parameters.

This is an essential step. I can’t emphasize enough how important it is - ignore it and your application will likely suffer from poor performance.

Follow AWS’s official instructions. Make sure you include the exact values AWS recommends for nfsvers, rsize, wsize, hard, timeo and retrans, or any other parameters AWS adds to its recommendations. Not doing so can result in very poor performance.

Also, make sure async is enabled (it is by default, but it’s good to double-check anyway).

After mounting your EFS, you can run `mount -l -t nfs4` and check that everything is configured as AWS recommends.
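The output of `mount -l -t nfs4` lists the active options in parentheses. Note that the NFS version shows up there as vers=, not nfsvers=. A small Python sketch can compare those options with the values AWS recommends. The expected values below are the ones AWS documented at the time of writing. The sample line (file system ID, region, path) is hypothetical. Because async is the default and isn’t normally listed, the check only flags an explicit sync.

```python
import re
from typing import Dict, List

# Values AWS documented for EFS mounts at the time of writing; re-check them.
# Note: `mount` reports nfsvers=4.1 as vers=4.1.
EXPECTED_VALUES = {"vers": "4.1", "rsize": "1048576", "wsize": "1048576",
                   "timeo": "600", "retrans": "2"}
REQUIRED_FLAGS = ("hard",)


def parse_mount_options(line: str) -> Dict[str, str]:
    """Turn the '(opt,key=value,...)' part of a mount line into a dict."""
    match = re.search(r"\(([^)]*)\)", line)
    if match is None:
        raise ValueError(f"no option list in: {line!r}")
    options = {}
    for item in match.group(1).split(","):
        key, _, value = item.partition("=")
        options[key] = value  # bare flags such as 'hard' map to ''
    return options


def check_mount(line: str) -> List[str]:
    """Return a list of deviations from the expected EFS mount options."""
    opts = parse_mount_options(line)
    problems = []
    for key, want in EXPECTED_VALUES.items():
        if opts.get(key) != want:
            problems.append(f"{key}: expected {want}, got {opts.get(key)}")
    for flag in REQUIRED_FLAGS:
        if flag not in opts:
            problems.append(f"missing option: {flag}")
    if "sync" in opts:
        problems.append("sync is set; async (the default) is expected")
    return problems


# Hypothetical line as printed by `mount -l -t nfs4`.
sample = ("fs-12345678.efs.us-east-1.amazonaws.com:/ on /mylocal/path type nfs4 "
          "(rw,relatime,vers=4.1,rsize=1048576,wsize=1048576,namlen=255,hard,"
          "proto=tcp,timeo=600,retrans=2,sec=sys,local_lock=none)")
print(check_mount(sample) or "mount options look OK")
```

On an instance, you could feed check_mount each line of `subprocess.run(["mount", "-l", "-t", "nfs4"], capture_output=True, text=True).stdout`.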

Keep in mind that, at least for now, EFS is only supported on Linux, not Windows.

Don’t write temporary files to the EFS

Remember, latency between your EC2 instances and the EFS is the one thing that can give you the most headaches when it comes to performance. Therefore, you want to minimize the number of round trips between your EC2 instances and the EFS.

If your application writes a lot of temporary files to the EFS, performance will be poor. Check the DataWriteIOBytes metric. If you find it to be higher than expected (especially in scenarios where you expect mostly reads), your application might be writing a lot of temporary files to the EFS.

Recommendation: write temporary files to your local file system (EBS) or keep them in memory.
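One way to enforce this in application code is to create temporary files through a helper that refuses to use a directory on the EFS. Here is a Python sketch, assuming the EFS is mounted at the hypothetical path /mylocal/path. Python’s tempfile module picks its directory from the TMPDIR, TEMP or TMP environment variables. If none are set, it falls back to a platform default such as /tmp.

```python
import os
import tempfile

EFS_MOUNT = "/mylocal/path"  # hypothetical EFS mount point


def is_on_efs(path: str, mount: str = EFS_MOUNT) -> bool:
    """True if `path` resolves to a location under the EFS mount point."""
    path = os.path.realpath(path)
    mount = os.path.realpath(mount)
    return os.path.commonpath([path, mount]) == mount


def local_temp_file(suffix: str = ""):
    """Create a temporary file on local storage, refusing to use the EFS."""
    tmp_dir = tempfile.gettempdir()
    if is_on_efs(tmp_dir):
        raise RuntimeError(f"temp dir {tmp_dir} is on the EFS; point TMPDIR at a local path")
    return tempfile.NamedTemporaryFile(suffix=suffix, dir=tmp_dir)


with local_temp_file(".txt") as f:
    f.write(b"scratch data that never touches the EFS")
```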

Don’t write any logs to the EFS

Just like temporary files, logs have the potential to really increase latency and lower performance, especially if your application writes entries frequently.

Recommendation: Write log files to your local disk (EBS) and then export them to CloudWatch Logs. This is good practice anyway. It simplifies troubleshooting, it makes it easier to monitor application metrics using CloudWatch Metric Filters, and you keep a history of transactions even after an EC2 instance is terminated.
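For a Python application, this could be as simple as pointing a FileHandler at a local directory and letting an agent ship the file. The sketch assumes you use the unified CloudWatch agent. The directory, log group and logger names are hypothetical. Check the agent’s logs section against its current documentation before use.

```python
import logging
import os

# Hypothetical local (EBS) directory; it must NOT be under the EFS mount point.
LOCAL_LOG_DIR = "/var/log/myapp"


def build_logger(log_dir: str = LOCAL_LOG_DIR, name: str = "myapp") -> logging.Logger:
    """Log to a file on local disk; a CloudWatch agent can ship it from there."""
    os.makedirs(log_dir, exist_ok=True)
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    for old in list(logger.handlers):  # avoid duplicate handlers on re-init
        logger.removeHandler(old)
        old.close()
    handler = logging.FileHandler(os.path.join(log_dir, "app.log"))
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


def cloudwatch_agent_logs_section(log_dir: str = LOCAL_LOG_DIR, group: str = "myapp") -> dict:
    """'logs' section for the unified CloudWatch agent config (verify against its docs)."""
    return {
        "logs": {
            "logs_collected": {
                "files": {
                    "collect_list": [{
                        "file_path": os.path.join(log_dir, "app.log"),
                        "log_group_name": group,
                        "log_stream_name": "{instance_id}",
                    }]
                }
            }
        }
    }
```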

Cache everything you can

Caching files will reduce the number of calls to the EFS. Options will vary depending on your application’s programming language or framework.

For example, by enabling opcache in my previous WordPress example, I was able to reduce response times significantly (~5x for max response time, ~10x for median response time, ~40x for minimum response time!).
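opcache is PHP-specific, but the same idea applies to files your application reads itself, such as templates, configuration or translations. Below is a minimal Python sketch of a time-to-live cache. This is a swapped-in technique, not what opcache does internally. Within the TTL, repeat reads never reach the EFS, but the content served can be up to ttl seconds stale. The class name and defaults are hypothetical.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class FileCache:
    """In-memory cache of file contents with a time-to-live (TTL).

    Within the TTL, repeat reads are served from memory and never touch the
    EFS (not even a stat() call). There is no size limit here, so bound it
    (or cache only a known set of files) in real use.
    """

    def __init__(self, ttl: float = 60.0,
                 reader: Optional[Callable[[str], bytes]] = None,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self._reader = reader or self._read_file
        self._clock = clock
        self._entries: Dict[str, Tuple[float, bytes]] = {}

    @staticmethod
    def _read_file(path: str) -> bytes:
        with open(path, "rb") as f:
            return f.read()

    def get(self, path: str) -> bytes:
        now = self._clock()
        entry = self._entries.get(path)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]  # cache hit: no EFS round trip
        data = self._reader(path)  # miss or expired: read from the EFS
        self._entries[path] = (now, data)
        return data
```

In an application, you would create one FileCache per process and call `cache.get(path)` wherever the code currently opens the file.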

Recommendation: Caching consumes memory. Since CloudWatch doesn’t publish EC2 memory utilization metrics by default, make sure you install the collectd CloudWatch plugin and monitor memory usage, so your system’s memory does not get overloaded.
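Whatever agent you use to publish it, the number to watch is essentially how much memory is in use compared with how much is available. Here is a Python sketch of that calculation from /proc/meminfo. It only works on Linux, and the MemAvailable field requires kernel 3.14 or later. It assumes you publish the result yourself or let an agent do it. The sample values are made up.

```python
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo content into {field: value}; values are mostly kB."""
    values = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            values[key.strip()] = int(fields[0])
    return values


def memory_used_percent(meminfo: Dict[str, int]) -> float:
    """Share of memory not available to new workloads (MemTotal - MemAvailable)."""
    total = meminfo["MemTotal"]
    return 100.0 * (total - meminfo["MemAvailable"]) / total


# Made-up sample; on an instance, read the real file: open("/proc/meminfo").read()
sample = "MemTotal:  4000000 kB\nMemFree:  500000 kB\nMemAvailable:  1000000 kB\n"
print(memory_used_percent(parse_meminfo(sample)))  # 75.0
```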

If you can keep your code in the local file system, do it.

It’s true that having a clean separation between the location of the application code and other files is not always possible without making some code or configuration changes. Or maybe what you have in mind is a clean lift-and-shift migration to the cloud.

All I’m saying is that you should think about it. In many cases it’s better to deploy code to the local file system than to keep it in the EFS. This reduces reads from the EFS, and therefore latency. It also makes it possible to run blue/green deployments across your fleet of EC2 instances.

If you decide to have your code live in the EFS, then make sure you have some mechanism to cache bytecode (such as opcache in PHP).

Watch DataWriteIOBytes and DataReadIOBytes… do they make sense?

Is it OK to have a lot of write operations when most of the code is supposed to do reads? Are there a lot of reads after you’ve enabled caching? Any such discrepancies can mean your application is writing temporary or log files somewhere or that caching is not done properly. Any of these situations are going to negatively impact performance.

If you make changes to thousands of files at a time, be prepared to wait (minutes, even hours).

I’ve done operations where I had to upload thousands of files to an EFS. Such a process can take hours, so it’s important to plan in advance and run some tests before executing such an operation in a Production environment. Running a chown command can also take a long time, so be prepared as you set up your Production environment. The same applies to large code deployments.

In theory, distributing file updates from multiple EC2 instances should mitigate this issue, but it’s not an easy thing to implement.
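Here is a minimal Python sketch of the distribution idea, under two assumptions. First, every instance walks the same source tree in a deterministic order, and each one copies only every shard_count-th file, starting at its own shard_index. Second, each instance also parallelizes its share with a thread pool, because each EFS operation is latency-bound rather than CPU-bound. The plan_copies and copy_shard names and the default of 16 workers are hypothetical. Test the worker count against your own EFS before a Production run.

```python
import os
import shutil
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def plan_copies(src: str, dst: str) -> List[Tuple[str, str]]:
    """List (source, destination) pairs for every file under `src`, in a stable order."""
    pairs = []
    for root, dirs, files in os.walk(src):
        dirs.sort()  # deterministic walk order so every instance sees the same plan
        rel = os.path.relpath(root, src)
        for name in sorted(files):
            pairs.append((os.path.join(root, name),
                          os.path.normpath(os.path.join(dst, rel, name))))
    return pairs


def copy_shard(src: str, dst: str, shard_index: int = 0, shard_count: int = 1,
               workers: int = 16) -> int:
    """Copy this shard's files concurrently; returns how many files it copied."""
    shard = plan_copies(src, dst)[shard_index::shard_count]

    def copy_one(pair: Tuple[str, str]) -> None:
        source, target = pair
        os.makedirs(os.path.dirname(target), exist_ok=True)
        shutil.copy2(source, target)  # copy2 also copies permission bits and timestamps

    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(copy_one, shard))  # list() re-raises the first failure, if any
    return len(shard)
```

For example, instance 0 of 3 would run `copy_shard("/local/release", "/mylocal/path/app", 0, 3)` (both paths hypothetical), and instances 1 and 2 would run the same call with their own index.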

Use EC2 Auto Scaling

One of the things I really like about EFS is that it makes it really simple to use a key service such as Auto Scaling. Auto Scaling requires that your servers don’t store any data that is relevant to previous transactions (a.k.a. Stateless Applications).

In other words, your application has to be OK with servers being shut down or launched at any given time. Since EFS is shared storage, any data stored in it does not live inside an EC2 instance, so it works really well with Auto Scaling. The good thing is that with EFS you don’t have to update your source code and call AWS APIs in order to run your applications on stateless servers.

That’s why using EFS and not taking advantage of Auto Scaling is a wasted opportunity.

There are two approaches to using Auto Scaling and EFS, and they both work well:

- Create an AMI where /etc/fstab is preconfigured with an entry for the EFS. More details can be found here.

- Mount the EFS using EC2 User Data, during EC2 instance launch.
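Both approaches boil down to the same mount. The Python sketch below renders either form. It assumes Amazon Linux (hence `yum install -y nfs-utils`) and the regional EFS DNS name. The file system ID, region and mount point are hypothetical. The option string is the one AWS documents for EFS, so re-check it against the current docs. The _netdev option tells the system that the fstab entry needs networking, so it isn’t mounted before the network is up.

```python
import shlex

# Mount options as AWS documents them for EFS; re-check the current docs.
MOUNT_OPTIONS = "nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport"


def efs_host(fs_id: str, region: str) -> str:
    """Regional EFS DNS name, e.g. fs-12345678.efs.us-east-1.amazonaws.com."""
    return f"{fs_id}.efs.{region}.amazonaws.com"


def fstab_entry(fs_id: str, region: str, mount_point: str) -> str:
    """Line to bake into /etc/fstab in an AMI (_netdev: requires networking)."""
    return f"{efs_host(fs_id, region)}:/ {mount_point} nfs4 {MOUNT_OPTIONS},_netdev 0 0"


def user_data_script(fs_id: str, region: str, mount_point: str) -> str:
    """Bash User Data that mounts the EFS at launch (assumes Amazon Linux/yum)."""
    target = shlex.quote(mount_point)
    return "\n".join([
        "#!/bin/bash",
        "set -euo pipefail",
        "yum install -y nfs-utils",
        f"mkdir -p {target}",
        f"mount -t nfs4 -o {MOUNT_OPTIONS} {efs_host(fs_id, region)}:/ {target}",
    ]) + "\n"


print(fstab_entry("fs-12345678", "us-east-1", "/mylocal/path"))
```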

Use CloudFront to serve static assets

CloudFront is AWS’s Content Delivery Network (CDN). You can use it to cache static content and serve it from edge locations that are physically close to your end users, improving their experience while reducing load on your servers. Regardless of how you implement your web application, it is good practice to use CloudFront to serve static content (e.g. JavaScript files, CSS, static images, etc.).

But when you’re using EFS, serving this type of content through CloudFront is even more important, since latency will be reduced by minimizing the number of times your web servers fetch files from the EFS.

Ideally, your static assets will live in S3 and you will completely avoid serving them from the EFS. But if you can’t serve them from S3, using CloudFront will still reduce the number of reads from the EFS and likely increase performance of your web application.
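CloudFront can only spare the EFS if it is allowed to cache. Whether it honors origin headers depends on your distribution’s cache settings. A common approach is to send a long Cache-Control max-age for static files from the origin. Here is a Python sketch. The extension list and the one-day default are hypothetical choices. If the assets live in S3, you can store the same header as object metadata instead.

```python
import os
from typing import Dict

# Hypothetical list of file types treated as static, cacheable assets.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".ico", ".woff2"}


def cache_headers(path: str, max_age_seconds: int = 86400) -> Dict[str, str]:
    """Headers that let CloudFront (and browsers) cache static assets for a day."""
    extension = os.path.splitext(path)[1].lower()
    if extension in STATIC_EXTENSIONS:
        return {"Cache-Control": f"public, max-age={max_age_seconds}"}
    return {}  # dynamic pages: leave caching decisions to the application
```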

Monitor your EFS throughput

Your EFS gets assigned a certain throughput (bytes/second). This value is proportional to the amount of data stored in it: the more data you have in the EFS, the more throughput you get. In addition, the EFS has extra room for bursts in data transfer. You can check the CloudWatch metrics PermittedThroughput and BurstCreditBalance.

In most cases, the assigned throughput is enough. But if you need more, a quick trick is to create a big dummy file and copy it to the EFS. I know this is not ideal, but it works!
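Here is the arithmetic behind the dummy-file trick, as a Python sketch. It assumes EFS’s Bursting Throughput mode, for which AWS documented a baseline of 50 KiB/s per GiB stored (50 MiB/s per TiB). Check the current figures before sizing anything. Keep in mind that the dummy data is billed as regular storage.

```python
# Bursting Throughput mode, as AWS documented it: baseline throughput grows by
# 50 KiB/s for every GiB stored (i.e. 50 MiB/s per TiB). Verify current figures.
BASELINE_KIB_PER_S_PER_GIB = 50


def baseline_mib_per_s(stored_gib: float) -> float:
    """Baseline throughput, in MiB/s, for a given amount of stored data."""
    return stored_gib * BASELINE_KIB_PER_S_PER_GIB / 1024


def dummy_data_gib(target_mib_per_s: float, stored_gib: float) -> float:
    """Extra GiB (e.g. a dummy file) needed to lift the baseline to the target."""
    required_gib = target_mib_per_s * 1024 / BASELINE_KIB_PER_S_PER_GIB
    return max(0.0, required_gib - stored_gib)


print(round(baseline_mib_per_s(100), 2))  # 4.88
print(round(dummy_data_gib(10, 100), 1))  # 104.8
```

In other words, a 100 GiB file system gets roughly 4.9 MiB/s of baseline throughput, and reaching 10 MiB/s would take about 105 GiB of extra data.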

More on EFS performance can be found here.

Remove IAM permissions that can lead to deleting the EFS accidentally

I’m paranoid when it comes to accidentally deleting things. Therefore I always make sure that once I have certain resources up and running in Production, it’s really hard to accidentally delete them. Especially something as vital as your application’s central file storage, such as an Elastic File System.

Even though AWS replicates EFS data across multiple Availability Zones, that won’t help you in the event of human error or accidental deletion.

My recommendation is to make sure that the following IAM entities have an Effect:Deny statement that applies to the elasticfilesystem:DeleteFileSystem operation for your Production EFS:

- IAM Users

- IAM Groups

- IAM Roles

And of course, don’t update anything with your AWS root user (which you shouldn’t be doing anyway).
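Here is a sketch of such a policy, rendered with Python’s json module so you can attach it to users, groups and roles. The region, account ID and file system ID are hypothetical. The resource ARN follows the arn:aws:elasticfilesystem:region:account-id:file-system/file-system-id format. In IAM, an explicit Deny overrides any Allow, so this holds even for principals with broad EFS permissions.

```python
import json


def deny_delete_policy(region: str, account_id: str, fs_id: str) -> dict:
    """IAM policy document that denies deleting one specific EFS file system."""
    arn = f"arn:aws:elasticfilesystem:{region}:{account_id}:file-system/{fs_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyProductionEfsDeletion",
            "Effect": "Deny",
            "Action": "elasticfilesystem:DeleteFileSystem",
            "Resource": arn,
        }],
    }


# Hypothetical identifiers; replace them with your Production values.
print(json.dumps(deny_delete_policy("us-east-1", "123456789012", "fs-12345678"), indent=2))
```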

Back up your files and be ready to restore your EFS.

Unlike Relational Database Service (RDS) or Elastic Block Store (EBS), EFS doesn’t have a built-in way to create snapshots. At least not right now. But you’ll still want to have a way to create copies of your EFS and potentially use them to restore your EFS to a particular point in time.

Thankfully, AWS has published two ways in which you can do that:

Conclusions

- Elastic File System is a very suitable option for migrating web applications to AWS, or for launching new web applications in AWS from scratch.

- Pros include: automatic storage elasticity, potential cost savings by eliminating storage over-provisioning, minimal to zero code changes in existing applications, minimal AWS vendor lock-in, plays well with an essential service such as Auto Scaling, plays well with Elastic Beanstalk, high availability, supports encryption at rest using KMS, it has built-in data consistency as well as file locking.

- Cons include: it’s easy to run into performance issues due to sub-optimal configuration or application behavior, EFS is only supported in Linux, there is no AWS built-in backup mechanism (you have to install and operate your own), updates to thousands of files can take a long time (hours in many cases).

- Main lessons learned:

- Make sure you follow AWS guidelines for mounting EFS volumes.

- Do load tests as early as possible. This is even more important than usual when you work with EFS, since the issues most customers experience are performance-related. This tool might help you with load test metric analysis.

- Cache everything you can in your application: bytecode, static assets, temporary files.

- Identify and avoid unnecessary reads and writes to the EFS, such as temporary files and logs. If you must write these types of files to disk, make sure they’re written to the local file system or block storage (EBS) and not the EFS. Export logs to CloudWatch Logs.

- If you need more throughput, create large dummy files and store them in the EFS (I know, this is not ideal, but it works).

- Use CloudFront to serve static assets, ideally from S3. But even if they’re served from the EFS, CloudFront will reduce the number of reads from the EFS and improve performance significantly.

I hope you found this helpful…
