First off, I have to say I find AWS Lambda an extremely useful way to build application components in an agile and reliable way. AWS Lambda is a service that allows you to upload code to the cloud and run it without having to maintain its underlying infrastructure. You pay for the number of executions as well as consumed resources, such as memory and compute time per execution.

AWS Lambda allows development teams to quickly build, integrate and test application components. When I have to write new application code, AWS Lambda is often my first option. That being said, there are some challenges when it comes to promoting code across different stages (or environments, however you want to call them). The typical flow includes first deploying code to a development stage and once it looks good move it to a place where it can be tested properly (QA or UAT stages), and when it’s ready move it to a customer-facing production environment.

The problem

How can I keep configurations, cloud resources and application logic separate (in a secure and reliable way) when I promote my function code across stages?

For example, I have a Lambda function that fetches a file from S3, does some processing and publishes a message to an SNS topic. In this scenario, there’s a need to have separate S3 buckets and SNS topics in DEV, TEST and PROD environments (test files shouldn’t be placed in a production bucket or dev messages shouldn’t be sent to a test SNS topic). Also, when a Lambda function is promoted from TEST to PROD, we should ensure the same code version that has been tested and validated is promoted to production. Security is also a key area to implement when transitioning code and configurations from development to production environments.

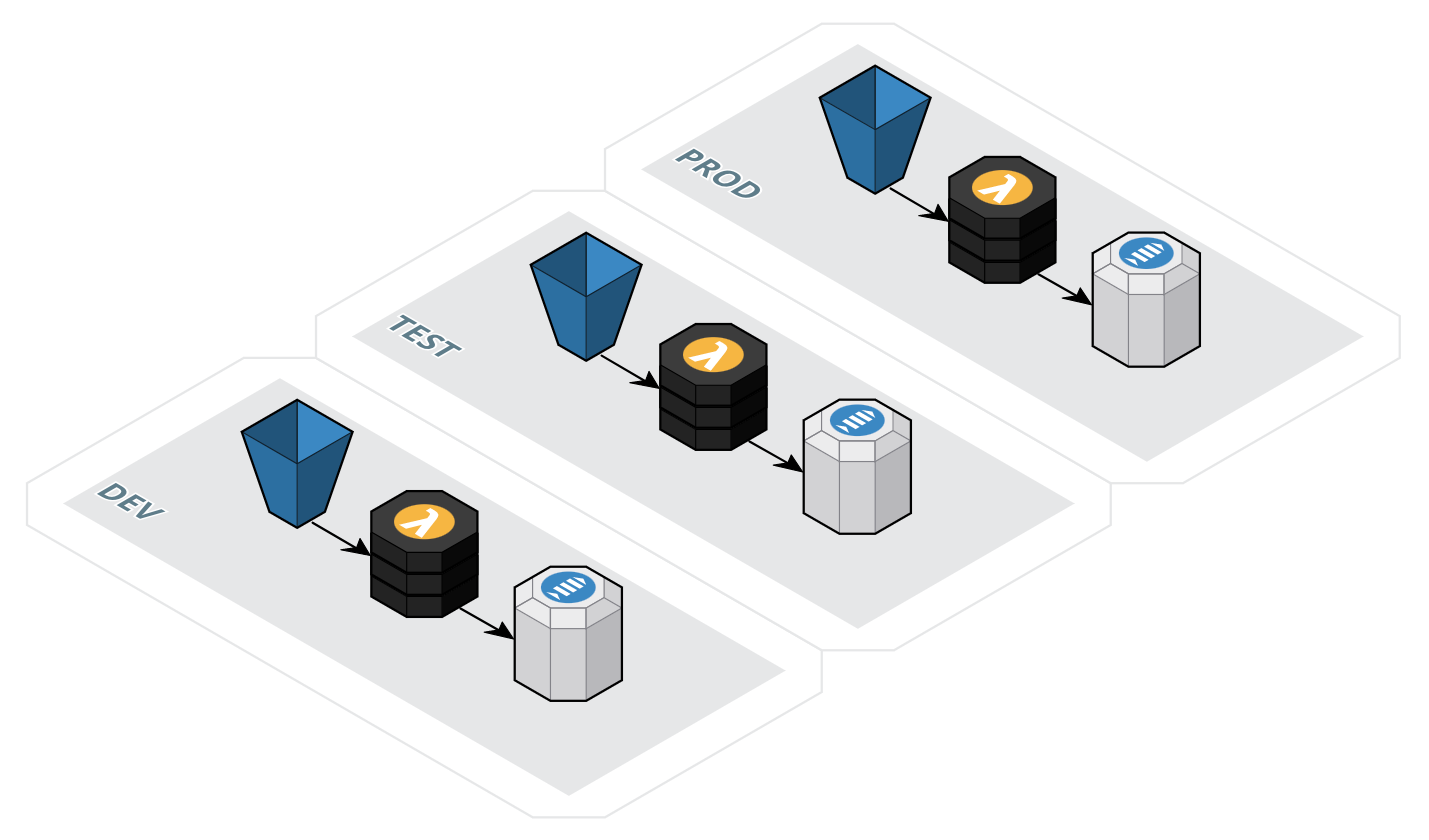

Our hypothetical app would look like this:

Solutions

In the server world this problem is often solved using environment variables and configuration files. Thankfully, in the AWS Lambda serverless context, we can also release code across multiple stages using features such as: versions, aliases, stacks, environment variables, configuration management tools (e.g. Secrets Manager, SSM Parameter Store), data storage services (i.e. S3, DynamoDB) and deployment automation tools such as CodePipeline, CodeBuild and the Serverless Application Model framework. Many of these solutions can be applied to both server-based and serverless applications.

So, let’s get started with some important principles…

Application vs. Infrastructure-level configurations

It’s important to distinguish between these two types of configurations: Application and Infrastructure. While application-level configurations are accessed directly by the application code, infrastructure-level configurations are accessed when launching and configuring cloud resources relevant to the application.

As with most software components, Lambda functions need to access application-level configurations that are specific to each stage, such as endpoint addresses for external APIs or databases, API keys to access external systems or authentication credentials, just to name a few. Some examples of infrastructure configurations are: RDS DB instance class, memory allocation or Provisioned Concurrency for a particular Lambda function, Security Groups, VPC configurations, etc.

When it comes to application-level configurations, there are three main ways to make them available to the application: 1) read values from Environment Variables, 2) package configuration files with these values, together with the function’s source code, 3) retrieve values at runtime from an external source. Infrastructure-level configurations can be set as parameters when launching AWS resources or retrieved during the build process, even maintained directly in templates when using Infrastructure as Code tools, such as CloudFormation (a recommended best-practice for maintaining infrastructure components).

Both application and infrastructure configurations vary according to stages. For example, the database size for a development environment will likely be smaller compared to the one in production, the development environment code will need to access a different database or external APIs compared to the production deployment, etc.

Keep AWS resources isolated: group all components relevant to each stage in a separate stack (preferably use CloudFormation and Serverless Application Model - SAM)

A key principle for a seamless code promotion across stages is to have full stage-specific isolation regarding code, configurations and cloud resources (e.g. Lambda functions, S3 buckets, RDS databases, SNS topics, SQS queues, etc.). The way to do this is to group all these components as a single unit that will be isolated and maintained in separate stages. This means that all application components will have to be replicated multiple times. Doing so manually would introduce a high risk of inconsistencies across environments and unnecessary additional effort to launch and maintain an application in multiple places.

This is why it’s essential to launch all components using cloud provisioning tools. The one I recommend is AWS Serverless Application Model (SAM). This framework will allow developers to define all serverless (and other cloud resources) and quickly deploy them as separate environments according to a specific stage.

The AWS Serverless Application Model launches a CloudFormation stack that groups all the resources defined in the SAM template (i.e. S3 buckets, Lambda functions, SNS topics, etc.). Each environment has its own isolated stack and given that CloudFormation delivers resource provisioning automation, launching and maintaining one stack per stage is not an extremely time-consuming task (as opposed to launching resources manually).

This will allow your team to isolate components and set configurations across different stages in an efficient way.

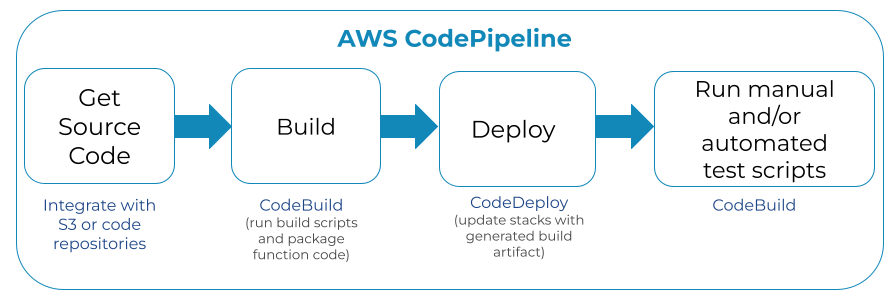

Automate code builds and deployments using CodePipeline, CodeBuild and CodeDeploy

AWS offers a wide set of services for implementing CI/CD workflows. The AWS CodePipeline service orchestrates all the steps required in a deployment automation scenario, starting with fetching source code (i.e. integrations with code repositories) and any subsequent build and deployment steps. CodeBuild delivers compute infrastructure to run build tasks and create deployment artifacts. CodeDeploy integrates with a number of AWS services to coordinate the deployment of application code packages, typically generated by CodeBuild, or use outputs from other custom tools.

Important advantages of separating code and configuration files:

The objective of implementing the solutions mentioned in this article is to deliver more reliable applications to end users and to simplify the management of Lambda functions. Below are some important advantages to expect:

- The same function code gets deployed across stages, which simplifies application development.

- The fact that code is not updated when it gets promoted across stages increases application reliability when releasing new functionality.

- This approach sets the foundation for consistent and reliable deployment automation processes. For example, every time new code is pushed to a git repository, an automated CI/CD pipeline can be triggered. Automation delivers consistent results that deliver more stable applications.

- Once we define the values for our configuration files across stages, they shouldn’t change often and the team can focus on application development activities. Having configurations separate from actual coding activities also reduces the possibility of human error that could cause disruptions due to misconfigurations.

- Code will be more reliable as it navigates throughout DEV, TEST and PROD since it doesn’t have any stage-specific logic in it.

- Security is also improved, since sensitive configuration values can be restricted to certain users and are not exposed in the source code.

Use AWS services to safely store and retrieve stage-specific configurations

AWS offers a set of services that can be used to manage configurations across stages. Below are some I recommend:

Secrets Manager

AWS Secrets Manager is a service designed to store and manage sensitive information, such as authentication credentials or API keys. The information in Secrets Manager is stored encrypted by KMS and it allows fine-grained access through the use of IAM policies. Secrets Manager interacts with other services, such as RDS and Redshift, to manage their access credentials. It allows application owners to safely trigger credential rotation on demand or using a schedule.

Before making your application retrieve sensitive data from Secrets Manager, it’s important to calculate pricing for high volume data requests, since there are situations where it’s not cost or performance effective to retrieve configurations on each Lambda execution. The service charges $0.05 per 10,000 API calls. The table below shows examples where each function execution results in one API call to Secrets Manager:

| Average Lambda executions per second | Approx. Secrets Manager API calls per month | Price |

|---|---|---|

| 1 execution per second | 2.6 Million | $13 USD/month |

| 10 executions per second | 26 Million | $130 USD/month |

| 100 executions per second | 260 Million | $1,300 USD/month |

It’s important to note that it’s not completely uncommon to see AWS accounts with 100 or even 1,000 Lambda executions per second. If not done properly, this could result in approximately $13,000 USD/month just in API calls to Secrets Manager! Thankfully, Secrets Manager pricing can be optimized by caching the values retrieved from Secrets Manager and storing them as global variables in the function’s code and reducing the number of API calls to this service. Lambda code can be implemented in a way that stores and caches these values outside the function handler, which would optimize calls to Secrets Manager.

There are tools published by AWS, such as the AWS Parameters and Secrets Manager Lambda Extension to optimize the retrieval and caching of secrets in your Lambda function executions. If you’re implementing a solution that could potentially result in a high number of secrets stored in Secrets Manager, it’s also important to calculate pricing. At $0.40 per month for a single secret, an application that results in thousands of stored secrets can quickly result in hundreds or even thousands of dollars every month just in secret storage fees, not counting API calls.

AWS Systems Manager (SSM) Parameter Store

SSM Parameter Store is a secure alternative to Secrets Manager. Similar to Secrets Manager, it allows the storage and management of sensitive data in AWS, in an encrypted and secure way. One key difference with Secrets Manager is that Parameter Store doesn’t manage credential rotation with other AWS resources (i.e. RDS databases, Redshift clusters, etc.).

Parameter Store offers two types of parameters: Standard and Advanced. The Advanced type allows parameters of a higher size compared to Standard (8KB vs. 4KB) and the possibility to create more parameters (100,000 vs. 10,000). However, there’s an important difference in pricing if you’re planning to create a high number of parameters for your application: Advanced costs $0.05 per parameter, while Standard doesn’t charge per stored parameter.

Pricing for API calls to Parameter Store is the same as Secrets Manager ($0.05 per 10,000 calls), therefore the table shown in the Secrets Manager section is equally applicable to both services. The same optimizations regarding caching parameters and using the AWS Parameters and Secrets Manager Lambda Extension apply when using SSM Parameter Store.

Store configuration files in S3

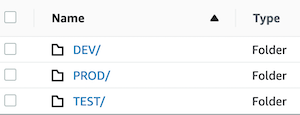

Even though Secrets Manager and SSM Parameter Store are the recommended solutions for storing and managing configuration files, S3 is also an option. One approach is to create a bucket that stores an application’s configuration values and then create one folder per stage: DEV, TEST, PROD.

Inside each folder, we can store a stage-specific file named env-config.yml , which we can copy to the code package during the build process. For example:

/DEV/env-config.yml

s3bucket: mydevbucket

snstopic: mydevtopic

/TEST/env-config.yml

s3bucket: mytestbucket

snstopic: mytesttopic

/PROD/env-config.yml

s3bucket: myprodbucket

snstopic: myprodtopic

The build process can then copy the relevant configuration file to the applicable deployment package, which will make the stage-specific configuration available to the function’s code. These values stored in S3 can also be used to configure infrastructure components according to each stage (i.e. RDS DB instance size, Lambda memory allocation, etc.), during the deployment process.

General guidelines when storing configuration files in AWS services

Like mentioned earlier, in order to optimize cost and latency, it’s important to reduce the number of times these values get retrieved by a Lambda function. If possible, avoiding data retrieval at runtime is the preferred option. If data must be retrieved at runtime, then optimizing the number of requests by caching or using a tool like the “AWS Parameters and Secrets Manager Lambda Extension” is recommended.

The preferred approach for retrieving stage-specific configuration values stored in these services is during the Lambda build process. The build scripts (preferably executed by CodeBuild) can fetch stage-specific configuration values and store them in the code deployment package of a Lambda function, as internal configuration files separate from the application logic. The build process can also use these values to define Lambda Environment Variables (more on that below) when building the deployment package.

If using S3, enabling S3 Versioning is important, since it allows to quickly roll back any issues caused by the latest version, by triggering a new code build and deployment. Secrets Manager and SSM Parameter Store also support a versioning feature. In the case of Secrets Manager, each new value has to be explicitly tagged with a version name in order to keep version history and for Parameter Store, versions are stored automatically.

It’s also important to follow a consistent naming pattern across the full application when storing configurations in any of these services, to allow for easier automation and troubleshooting. For example: /<application-name>/<stage>/<configuration-name>

Security is always an important area to consider. The best practice would be to keep two separate AWS accounts for pre-production and production environments and provide limited developer access to the production account. Access to any configuration values with sensitive data (i.e. database credentials, API keys, etc.) must be restricted with proper IAM permissions.

Using Lambda Environment Variables

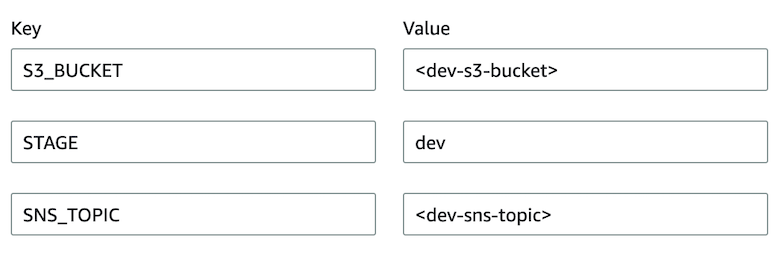

A key feature in AWS Lambda is Environment Variables. They are key/value pairs that get configured in a function. You can set Environment Variables using the AWS Management console, CLI, SDK or in CloudFormation templates, as well as the Serverless Application Model.

The recommendation is to assign Environment Variables in a way that is specific to a particular stage, which means the values of these variables will change according to where a particular Lambda function is deployed. Below is one example of environment variables, which in this case are relevant to the DEV stage:

My recommended approach is to declare Environment Variables in the CloudFormation or SAM template and assign stage-specific values during the build and deployment steps and when initially launching the stack. They can be set as Parameters in the CloudFormation stack or be fetched from S3, SSM Parameter Store or Secrets Manager when updating the CloudFormation stack.

For example, below is the SAM template syntax that retrieves a value from Secrets Manager and sets the Environment Variable value with it:

Environment:

Variables:

SNS_TOPIC: !Sub "{{resolve:secretsmanager:${SnsSecretName}::topic}}"

In this case, SnsSecretName is a parameter that is configured in the CloudFormation stack and that points to the name of the secret in Secrets Manager. topic is the name of the field configured in Secrets Manager to store the value of the SNS TOPIC. When the stack is launched or updated, this value is retrieved from Secrets Manager to configure the specific Lambda function.

Below is an example where the value is retrieved from SSM Parameter Store:

Environment:

Variables:

SNS_TOPIC: !Join [ '', ['{{resolve:ssm:/', !Ref SnsParamName, ':', !Ref SnsParamVersion, '}}']]

SnsParamName contains the name of the parameter in SSM Parameter Store. In this case it’s necessary to specify a version for the parameter that will be retrieved, which is defined by SnsParamVersion.

One useful feature of SAM templates is the ability to define values for specific Environment Variables as a global configuration that affects all functions defined in that template. This simplifies the configuration process in situations where multiple functions need to access common variables. Since this feature allows a particular Environment Variable to be only defined once, it makes configuration much easier. Here is one example:

Globals:

Function:

Environment:

Variables:

ENV_VARIABLE_1: <value>

Once configured, Environment Variables can be accessed by the application code, seamlessly and with zero latency. Here’s an example of how to retrieve an Environment Variable in NodeJS:

let region = process.env.STAGE

It’s not recommended to store sensitive values in Environment Variables, since they can become visible to anyone with access to the Lambda console. For sensitive values, it’s preferred to use Environment Variables to only store a reference to the location of these values and then make the function code retrieve those values using the AWS API and caching those values as much as possible, in order to optimize cost and latency. If sensitive values must be configured in Environment Variables, it’s highly recommended to encrypt those values using the KMS service, which will improve security and further restrict which IAM entities have access to the values configured in encrypted environment variables.

Given that Environment Variables can be accessed by all Lambda executions for that particular function, it’s important to avoid configuring values that could potentially vary based on certain conditions specific to a particular execution.

Packaging configuration files in the code deployment package

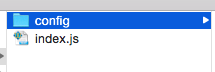

The most common way to deploy a Lambda function’s code is to create a deployment package. Inside this package we can store a stage-specific configuration file, which will be accessed by the function. For example, in the following folder structure:

Under the config folder we can place a file called env-config.yml that varies according to the function’s stage.

This is what the DEV file would look like:

s3bucket: mydevbucket

snstopic: mydevtopic

TEST would look like this:

s3bucket: mytestbucket

snstopic: myteststagetopic

… and PROD:

s3bucket: myprodbucket

snstopic: myprodtopic

The recommendation is to place this configuration file in the code package during the build process, according to each stage. It’s important to avoid storing this configuration file in the code repository, so we can have separation between code and configurations and also to avoid exposure of production values during development. This configuration file can be stored in S3, SSM Parameter Store or Secrets Manager (which are covered earlier in this article), then the file can be fetched at build time and added to the code deployment package.

Below is a code snippet showing an example of how to fetch the contents of this file. For performance reasons, this code should preferably be placed outside of the Lambda function’s handler, to ensure the fetched values are available to multiple Lambda executions:

const fs = require("fs");

const yaml = require('js-yaml');

const config_file = fs.readFileSync('./config/env-config.yml','utf8');

const env_config = yaml.load(config_file);

const MY_S3_BUCKET = env_config.s3bucket

const MY_SNS_TOPIC = env_config.snstopic

One advantage of this approach is performance, since the Lambda function doesn’t have to fetch configurations from an external source, such as S3, Parameter Store or Secrets Manager. I’ve executed load tests that showed latency optimizations in the 50ms-100ms range when fetching configurations locally, compared to external sources.

Something to keep in mind is that if you have multiple functions that access the same configuration values, you will have to update all functions when these values change. For example, you have 10 functions that use the same S3 TEST bucket and you have to use a different bucket for some reason (or change the location of the config files in the same bucket). In this case, you’ll end up updating and deploying 10 function packages, which is one reason to implement build and deployment automation solutions from day-1.

Reduce deployment risk by using Versions and Aliases in Lambda functions - together with Blue/Green deployments

A Version in AWS Lambda is essentially a snapshot of your application code and configurations. When you deploy your function’s code, you can also publish a new version. Once published, a version is an immutable snapshot of a Lambda function and it gets assigned a sequential number. Lambda also assigns a version tag to the latest set of code and configurations, which uses the reserved name $LATEST.

Over time, your application would have multiple versions (or snapshots) of Lambda functions. This brings us to aliases. An Alias is a pointer to a particular version of your function. You can assign descriptive names to your function aliases and control which version they point to. For example, you can create a NEW-RELEASE alias that points to version $LATEST and a STABLE-RELEASE alias that points to a previous, stable version.

When creating a new alias using the Lambda console, CLI or SDK, you can specify a Weight configuration, which is a percentage that specifies the portion of executions that a particular alias will serve. This is a useful feature that decreases risk when releasing new code/configurations and delivers the ability to monitor, mitigate and rollback any potential disruptions caused by a new deployed version.

If using SAM, you can configure conditions that automatically trigger a deployment rollback, such as CloudWatch Alarms or even invoke a separate Lambda function that could perform validation logic to ensure the new version behaves as expected. The Serverless Application Model supports the DeploymentPreference configuration, which seamlessly implements automated Blue/Green deployment configurations when new code and/or configurations get applied to a Lambda function.

Versions and aliases are definitely a feature worth considering in order to keep track of the update history in a particular Lambda function. They also allow the implementation of useful features such as Blue Green Deployments, which deliver flexibility over how fast a new update will be released and automate the conditions that will make it roll back or complete successfully. This will increase application reliability and improve the user experience when new code or configurations are released.

To summarize:

- Separating code and configuration is a problem applicable to Lambda functions, similar to other types of server-based application components.

- Using AWS tools such as CloudFormation, Serverless Application Model (SAM), CodePipeline, CodeBuild and CodeDeploy is an essential aspect when launching and maintaining reliable Serverless applications.

- AWS offers a set of solutions that help with seamlessly promoting code and configurations across multiple environments and stages. S3, SSM Parameter Store and Secrets Manager are solutions that should be evaluated for this purpose. Using parameters in CloudFormation stacks is also a viable solution for many configurations.

- Security is an essential consideration, particularly when it comes to production deployments.

- Automation is particularly important in a Serverless deployment strategy due to the high number of cloud resources that typically need to be launched, configured and maintained in this type of architecture.

- AWS cost must be evaluated for each configuration management and retrieval solution under consideration. This is particularly important for high volume applications, which can result in thousands of dollars just related to configuration management when the right solution is not implemented.

Are you considering using a serverless architecture, or already have one running?

I have many years of experience working with Lambda functions and other serverless components, including high volume applications. I can certainly help you with designing, implementing and optimizing your serverless components. Just click on the Schedule Consultation button below and I’ll be happy to have a chat with you.