One of the most common problems AWS customers face is the dreaded “Bad AWS Billing Surprise”. It’s the beginning of the month, you’ve just received your latest AWS bill and found out that you owe AWS a completely unexpected amount of money (typically a large sum, hundreds or thousands of dollars above what you originally expected, depending on your budget). There are also situations where you regularly spend far more than you should over an extended period of time, which can be just as expensive.

If you’re in either of these situations, a critical question is:

How do you reduce your AWS cost responsibly, without putting your applications and the business transactions they support at risk?

There’s no easy answer to this problem. That’s why in this article I’ll walk you through a proven process I’ve put together in order to reduce AWS cost…

Some important principles of AWS cost reduction

Before you start terminating EC2 instances, spending money on Reserved purchases or making any changes to your AWS infrastructure, keep in mind these important principles:

- Be patient. A full cost optimization cycle can easily take 3 months or longer. There will be Quick Wins, but reaching your final goal is usually an iterative process that spans multiple AWS billing cycles.

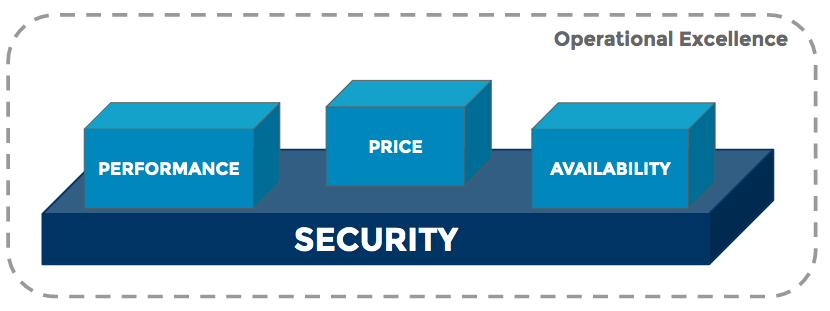

- You have to find the right balance. Based on your application needs, make sure you’re paying for the right amount of Performance, Availability and Operational Excellence that your business requires. Security should be the last area (if at all) to consider when it comes to cost reductions; in most cases, the greatest portion of cost is related to performance and availability anyway.

- **Cost reductions need to be prioritized (some are not even worth the effort)**. Implementing infrastructure updates can be risky, and it can take precious time and effort away from you and your team. Sometimes the engineering time required to optimize a certain area of your AWS infrastructure is far more expensive than the actual benefit. When deciding whether to go ahead with a cost reduction project, weigh the expected savings against both the engineering hours and the opportunity cost (i.e. not building new features because the team is busy with cost reduction tasks). For example, spending $5,000 in engineering time for a $200/month reduction in your AWS bill might not be worth it. I’ll cover this area in more detail below, so keep reading.
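To make the trade-off in the last principle concrete, here is a minimal payback calculation in Python. It only uses the two figures from the example above; the `opportunity_cost` parameter is a hypothetical placeholder for whatever value you put on delayed feature work.

```python
def payback_months(engineering_cost, monthly_savings, opportunity_cost=0.0):
    """Months of savings needed to recover the one-off cost of an optimization.

    opportunity_cost is a hypothetical estimate of the value of the feature
    work that gets postponed while the team works on cost reduction.
    """
    if monthly_savings <= 0:
        return float("inf")  # the change never pays for itself
    return (engineering_cost + opportunity_cost) / monthly_savings


# Example from the text: $5,000 of engineering time for a $200/month reduction.
months = payback_months(5_000, 200)
print(f"Break-even after {months:.0f} months")  # Break-even after 25 months
```

Whether a 25-month payback is acceptable is a business decision, but writing the calculation down makes that decision explicit.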

With these principles in mind, we can continue with the actual steps…

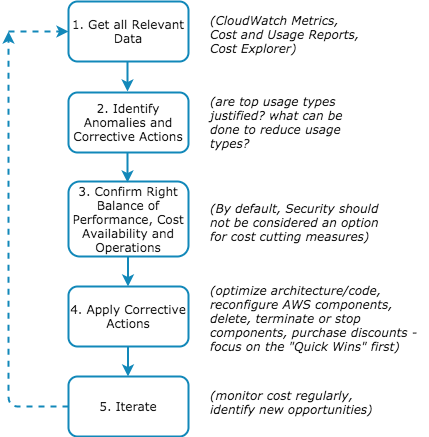

Step 1 - Get All Relevant Data

The first thing you need to do is get all cost data and relevant system metrics in front of you, so you can find cost-related inefficiencies.

Below are some important tools that can help you find relevant data:

- AWS Cost and Usage Reports. These are detailed reports that AWS creates 2-3 times per day and are stored in an S3 bucket that you define. Data can be as granular as 1 hour and they have all the relevant usage information you’ll need. The downside is that these reports can easily turn into many thousands of records, therefore you need to have a scalable way to analyze them. Check out this article I wrote, which describes how to use AWS Athena to analyze Cost and Usage Reports and find your main usage types and resources by cost. AWS Athena is a great tool to drill down on your AWS Cost and Usage data and find areas to optimize.

- AWS Cost Explorer. This is a graphical interface in the AWS console that lets you visualize the items that contribute to your AWS cost, such as top services, usage types, API operations and others. Even though Cost Explorer is readily available and easy to use, querying Cost and Usage Reports can be a much more effective way to find the key areas that contribute to AWS cost. For example, Cost Explorer doesn’t give you a full view of individual AWS resources (EC2 instances, RDS DB instances, S3 buckets, etc.) and the usage types each resource consumes, which is an essential step for getting to the bottom of many cost-related issues.

- CloudWatch Metrics. Once the top resources by cost are identified, it’s sometimes necessary to look at the actual system metrics for specific AWS components. That’s where a service like CloudWatch is essential for identifying cost optimization opportunities, such as idle or under-utilized resources. CloudWatch is also important for making informed cost optimization decisions that could affect performance or availability.

- AWS Trusted Advisor. This AWS service runs periodic checks on your AWS infrastructure and produces a list of recommendations. There is a Cost Optimization category, which typically contains useful findings, such as idle or under-utilized resources, among others. To access these checks, you need a Business or Enterprise Support plan, which has an additional cost (the Basic and Developer plans only include a limited set of core checks).

- AWS Compute Optimizer. This AWS feature creates an automated report that analyzes compute resources in your account and comes up with recommendations for EC2 usage optimizations.

I also recommend installing MiserBot, a Slack and email bot I developed, which gives you daily updates on your AWS cost and a detailed view of where your money is going.

Now that you know where to find important cost data, it’s time to look for specific information…

Where’s the money going?

The first step is to understand which areas account for the most AWS cost, and what percentage of your total AWS bill each of them represents.

There are three important dimensions to analyze:

A. Top AWS Usage Types by Cost

The first meaningful step is to understand which AWS usage types account for the most spend. Some common examples:

- BoxUsage. Anything in this category refers to an On Demand EC2 instance type. As we’ll cover below, On Demand EC2 usage can be reduced by purchasing EC2 Reserved Instances or AWS Savings Plans.

- TimedStorage. S3 storage cost starts with this label, and there are some variations depending on the storage class. Cost can be reduced by assigning objects to a cheaper storage class, such as Infrequent Access, depending on your application’s availability and access requirements for specific objects in S3.

- InstanceUsage. On Demand RDS DB compute time. As with EC2, On Demand RDS usage can be optimized by purchasing Reserved RDS instances.

- HeavyUsage. EC2 and RDS Reserved instances. Usage in this category represents Reserved purchases in your account. It’s important to keep track of these purchases and make sure they’re being used properly; it’s not uncommon to see customers who don’t take advantage of the Reserved discounts available in their AWS accounts.

- DataTransfer-Out. This is data transferred out to the internet, for example from EC2 instances, Load Balancers or CloudFront distributions. In some applications (such as high-traffic or media sites) this can be one of the largest cost items.

Using the Athena setup from the article mentioned above, you can run the following query:

```sql
SELECT lineitem_productcode,
       lineitem_usagetype,
       round(sum(cast(lineitem_unblendedcost AS double)), 2) AS sum_unblendedcost
FROM billing.hourly
WHERE period = '<period>'
GROUP BY lineitem_productcode, lineitem_usagetype
ORDER BY sum_unblendedcost DESC
```
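If you want to try the same aggregation before setting up Athena, here is a minimal Python sketch over a Cost and Usage Report CSV file. It assumes the legacy CUR column names (lineItem/ProductCode, lineItem/UsageType, lineItem/UnblendedCost), and the sample rows are made up. It also computes each usage type's share of the total bill, which is the percentage mentioned at the start of this section.

```python
import csv
import io
from collections import defaultdict


def top_usage_types(csv_file, limit=10):
    """Sum unblended cost by (product code, usage type) and return the top
    entries as (product, usage_type, cost, percent_of_total) tuples."""
    totals = defaultdict(float)
    for row in csv.DictReader(csv_file):
        key = (row["lineItem/ProductCode"], row["lineItem/UsageType"])
        totals[key] += float(row["lineItem/UnblendedCost"] or 0)
    grand_total = sum(totals.values())
    ranked = sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:limit]
    return [
        (product, usage_type, round(cost, 2),
         round(100 * cost / grand_total, 1) if grand_total else 0.0)
        for (product, usage_type), cost in ranked
    ]


# Hypothetical sample rows; a real report has many more columns and rows.
SAMPLE = """lineItem/ProductCode,lineItem/UsageType,lineItem/UnblendedCost
AmazonEC2,USE1-BoxUsage:c5.large,60.00
AmazonEC2,USE1-BoxUsage:c5.large,20.00
AmazonS3,TimedStorage-ByteHrs,15.00
AmazonEC2,USE1-DataTransfer-Out-Bytes,5.00
"""

for product, usage_type, cost, pct in top_usage_types(io.StringIO(SAMPLE)):
    print(f"{product:10} {usage_type:30} ${cost:>8.2f} {pct:5.1f}%")
```

For real reports with millions of rows, Athena remains the more practical option; the sketch is only meant to show what the query computes.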

Once you have the top usage types, you’ll have a good starting point to uncover areas that are impacting your AWS bill. For example:

- Why are you spending a particular amount of money on a specific EC2 instance type?

- Identify anomalies, such as a large RDS or EC2 instance type that you weren’t aware of.

B. Top AWS Resources by Cost

This is a list of the top AWS resources, such as specific EC2 instances, RDS DB instances, S3 buckets, CloudFront distributions, etc. This will give you a detailed view of the most expensive AWS components in your application. Once you have this list, focus on the top 5-10 and also on those that are a considerable expense for your budget.

Using Athena, you can run the following query:

```sql
SELECT lineitem_productcode,
       lineitem_resourceid,
       sum(cast(lineitem_unblendedcost AS double)) AS sum_unblendedcost
FROM billing.hourly
WHERE period = '<period>'
GROUP BY lineitem_productcode, lineitem_resourceid
ORDER BY sum_unblendedcost DESC
LIMIT 100
```

Keep in mind that this information isn’t readily available in AWS Cost Explorer, which is why I recommend using Athena to analyze Cost and Usage Reports.

C. Find usage types for the top AWS resources

Once you find the top AWS resources by cost, it’s time to drill down on each component and see what’s costing your applications the most money. For example:

- You have an expensive CloudFront distribution. Are you paying a lot of money for Requests, DataTransfer or both?

- You have an expensive EC2 instance. Are you paying for BoxUsage (compute time), DataTransfer-Out (internet) or DataTransfer-Regional (cross Availability Zones)?

- You have an expensive RDS DB instance. Are you paying for InstanceUsage (compute time), StorageIOUsage (disk I/O operations) or Storage (disk storage)?

- You have an expensive S3 bucket. Are you paying for Requests (API calls, billed by usage tier), DataTransfer-Out (data transfer out to the internet) or TimedStorage (storage; check whether it’s Standard, Standard Infrequent Access, One Zone Infrequent Access, Reduced Redundancy, Glacier or Glacier Deep Archive)?

This is my recommended Athena query to find the usage types for a particular AWS resource:

```sql
-- DISTINCT is unnecessary here: GROUP BY already returns one row per usage type
SELECT lineitem_usagetype,
       sum(cast(lineitem_usageamount AS double)) AS sum_usageamount,
       sum(cast(lineitem_unblendedcost AS double)) AS sum_unblendedcost
FROM billing.hourly
WHERE lineitem_resourceid = '<resourceId>'
  AND period = '<period>'
GROUP BY lineitem_usagetype
ORDER BY sum_unblendedcost DESC
```

Once you know exactly which resources consume the most money and what their usage types are, you’re in a position to take corrective action. As with the top AWS resources by cost, this information is not available in AWS Cost Explorer, only in Cost and Usage Reports.
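When a resource has many usage types, it can help to bucket them into the broad categories discussed above. The Python sketch below does this with simple substring matching. The patterns are assumptions based on common usage-type names (for example USE1-BoxUsage:c5.large, USE1-DataTransfer-Regional-Bytes or Requests-Tier1) and will likely need extending for other services.

```python
from collections import defaultdict

# Assumed substring patterns, checked in order. More specific patterns come
# first (e.g. "StorageIOUsage" before the generic "Storage").
CATEGORIES = [
    ("DataTransfer-Regional", "cross-AZ data transfer"),
    ("DataTransfer-Out", "internet data transfer"),
    ("BoxUsage", "compute"),
    ("InstanceUsage", "compute"),
    ("HeavyUsage", "reserved compute"),
    ("StorageIOUsage", "disk I/O"),
    ("TimedStorage", "storage"),
    ("Storage", "storage"),
    ("Requests", "requests"),
]


def categorize(usage_type):
    """Map a usage type string to a broad cost category."""
    for pattern, category in CATEGORIES:
        if pattern in usage_type:
            return category
    return "other"


def cost_by_category(usage_rows):
    """usage_rows: (usage_type, cost) pairs, e.g. rows returned by the query above.
    Returns {category: total_cost}, most expensive first."""
    totals = defaultdict(float)
    for usage_type, cost in usage_rows:
        totals[categorize(usage_type)] += cost
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))
```

For an S3 bucket, for instance, this quickly shows whether Requests or TimedStorage dominates, which points to very different fixes.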

Step 2 - Identify Anomalies and Corrective Actions

Once you have the relevant usage data from the first step, ask two questions about each top cost item:

- Are top AWS usage items justified?

- What can be done to reduce top usage items?

The important part in this step is to identify potential corrective actions, which will then be evaluated, prioritized and potentially executed in the following steps.

Below are some common costly items:

EC2 Compute

In my experience, EC2 is almost always one of the top areas in AWS bills. If EC2 BoxUsage (On Demand EC2) is among your top AWS cost items, I recommend looking into the following areas:

- Find idle EC2 instances. Check CloudWatch metrics for all instances of the affected EC2 instance type (e.g. c5.large, t3.large) and confirm whether they’re actually being used.

- Find over-provisioned EC2 instances. It’s common to assign more compute capacity than necessary to certain application components. Examples include a t3.xlarge running a workload that a t3.large or t3.medium could safely handle, or an EC2 Auto Scaling Group with more instances than required.

A quick way to do this is to open the CloudWatch Metrics console and select CPUUtilization for all instances in your account. You’ll see a graph of all EC2 instances in your account and how much (or how little) CPU they consume. Any EC2 instance with less than 5% CPU Utilization over a long period of time (e.g. 2 weeks or 1 month) is very likely not being used efficiently and should be a candidate for downsizing or termination.
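The same check can be scripted. The sketch below uses boto3 to list running instances, pull two weeks of hourly CPUUtilization averages from CloudWatch, and flag instances under the 5% threshold from the paragraph above. The 14-day window is only an example. Keep in mind that CPU alone can miss memory-bound workloads, since EC2 memory metrics only show up in CloudWatch if you install the CloudWatch agent.

```python
from datetime import datetime, timedelta, timezone

CPU_THRESHOLD_PCT = 5.0  # threshold suggested above
LOOKBACK_DAYS = 14       # example window ("e.g. 2 weeks")


def running_instance_ids():
    """IDs of all running EC2 instances in the current region."""
    import boto3  # imported lazily so the pure helpers below work without boto3
    ec2 = boto3.client("ec2")
    ids = []
    pages = ec2.get_paginator("describe_instances").paginate(
        Filters=[{"Name": "instance-state-name", "Values": ["running"]}]
    )
    for page in pages:
        for reservation in page["Reservations"]:
            ids.extend(inst["InstanceId"] for inst in reservation["Instances"])
    return ids


def fetch_cpu_datapoints(instance_id, days=LOOKBACK_DAYS):
    """Hourly average CPUUtilization datapoints for one instance."""
    import boto3
    cloudwatch = boto3.client("cloudwatch")
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/EC2",
        MetricName="CPUUtilization",
        Dimensions=[{"Name": "InstanceId", "Value": instance_id}],
        StartTime=end - timedelta(days=days),
        EndTime=end,
        Period=3600,  # with 14 days: 336 datapoints, under the 1,440 per-call limit
        Statistics=["Average"],
    )
    return response["Datapoints"]


def average_cpu(datapoints):
    """Mean of the hourly averages, or None when CloudWatch returned no data."""
    values = [point["Average"] for point in datapoints]
    return sum(values) / len(values) if values else None


def underutilized(avg_cpu_by_instance, threshold=CPU_THRESHOLD_PCT):
    """Sorted instance IDs whose average CPU is below the threshold."""
    return sorted(
        instance_id
        for instance_id, avg in avg_cpu_by_instance.items()
        if avg is not None and avg < threshold
    )


# Usage (needs boto3 and AWS credentials):
# cpu = {i: average_cpu(fetch_cpu_datapoints(i)) for i in running_instance_ids()}
# print(underutilized(cpu))
```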

If EC2 HeavyUsage (Reserved EC2) is a top usage type, look into the Reserved Instance Utilization reports in the AWS Billing console. If they show that Reserved discounts are not being applied effectively in your AWS account, one option is to move applicable On Demand workloads to the EC2 instance types covered by the Reserved Instances you’ve already purchased. If this isn’t possible, a last-resort option is to sell unused reservations in the Amazon EC2 Reserved Instance Marketplace (only Standard Reserved Instances can be sold there, not Convertible ones). You might not get back the full amount you originally paid, but if you’re sure you don’t need those reservations, you’ll at least recover a portion of it and reduce your AWS bill.

RDS Compute

Relational databases are still very popular in many applications; therefore a service like RDS is commonly seen among the top AWS usage types. Even though users pay for data storage and I/O in RDS, compute is in most cases the top cost item. InstanceUsage is the usage type for On Demand RDS instances, while HeavyUsage is for Reserved RDS instances.

If RDS is a top usage item, you can apply strategies similar to those for EC2: find idle and over-provisioned RDS DB instances, and find under-utilized reservations. From the CloudWatch Metrics console, view the DatabaseConnections and CPUUtilization metrics for all databases in your account over a 2-4 week period. In my experience, it’s not uncommon to find databases with 0 connections, which are obvious candidates for termination.
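A similar boto3 sketch works for RDS. It uses the Maximum of DatabaseConnections rather than the Average, because a database that served even one connection during the window isn’t idle. The 28-day window is an example within the 2-4 week range; databases with no datapoints at all (for instance, stopped ones) are left for manual review.

```python
from datetime import datetime, timedelta, timezone

LOOKBACK_DAYS = 28  # example window within the 2-4 weeks suggested above


def db_instance_ids():
    """Identifiers of all RDS DB instances in the current region."""
    import boto3  # lazy import so the pure helpers below work without boto3
    rds = boto3.client("rds")
    ids = []
    for page in rds.get_paginator("describe_db_instances").paginate():
        ids.extend(db["DBInstanceIdentifier"] for db in page["DBInstances"])
    return ids


def fetch_connection_datapoints(db_id, days=LOOKBACK_DAYS):
    """Hourly maximum DatabaseConnections datapoints for one DB instance."""
    import boto3
    cloudwatch = boto3.client("cloudwatch")
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/RDS",
        MetricName="DatabaseConnections",
        Dimensions=[{"Name": "DBInstanceIdentifier", "Value": db_id}],
        StartTime=end - timedelta(days=days),
        EndTime=end,
        Period=3600,  # with 28 days: 672 datapoints, under the 1,440 per-call limit
        Statistics=["Maximum"],
    )
    return response["Datapoints"]


def peak_connections(datapoints):
    """Highest connection count in the window, or None when there is no data."""
    values = [point["Maximum"] for point in datapoints]
    return max(values) if values else None


def idle_databases(peak_by_db):
    """Databases that never had a single connection in the window.
    None (no data) is not treated as idle."""
    return sorted(db for db, peak in peak_by_db.items() if peak == 0)


# Usage (needs boto3 and AWS credentials):
# peaks = {db: peak_connections(fetch_connection_datapoints(db)) for db in db_instance_ids()}
# print(idle_databases(peaks))
```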

Regarding under-utilized reserved RDS instances, one key difference compared to EC2 is that you can’t sell RDS reservations, as there’s no marketplace to do so. You can find more information on RDS Reserved in this article.

Data Transfer

There are two types of Data Transfer that can cost you a lot of money: 1) DataTransfer-Regional and 2) DataTransfer-Out.

Regional Data Transfer happens within the same AWS region, but across Availability Zones. It’s a common usage type in applications that replicate or access large amounts of data across multiple Availability Zones. One way to reduce it is to deploy EC2 instances in a single AZ, which has the downside of reducing resiliency in case of an AZ failure. That’s why it’s very important to evaluate your application’s availability requirements and how much money you’re actually spending on this usage type. If Regional Data Transfer is a top usage type, it comes down to how much money you’re willing to spend on multi-AZ redundancy.
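To decide whether multi-AZ redundancy is worth its data transfer cost, it helps to put a number on it. The sketch below estimates the monthly cost of cross-AZ traffic. The default rate of $0.01 per GB, charged on both the sending and the receiving side, is an assumption based on commonly published pricing; check current pricing for your region, and use the DataTransfer-Regional usage amounts from your Cost and Usage Reports as the input.

```python
def monthly_cross_az_cost(gb_per_day, rate_per_gb=0.01, days=30):
    """Estimated monthly cost of moving data between Availability Zones.

    Assumes (verify against current pricing) that the rate is charged once
    on the sending side and once on the receiving side, hence the factor 2.
    """
    return gb_per_day * days * rate_per_gb * 2


# Hypothetical workload replicating 500 GB per day across AZs.
print(f"${monthly_cross_az_cost(500):,.2f} per month")  # $300.00 per month
```

Comparing that figure with the cost of the downtime your business can tolerate is what turns “is multi-AZ worth it?” into a concrete decision.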

Data Transfer Out is data transferred out to the internet, and it’s typically incurred by services such as CloudFront, S3 and EC2. If you have an application that serves heavy media content or has high traffic, you’re likely to spend a good amount of money on this usage type. One way to reduce it is to enable compression in CloudFront as well as in your web servers. I’ve seen cases where eliminating unnecessary data from JSON responses reduced this cost significantly. Likewise, simply optimizing image or video size can have a big impact in this area.
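To get a feel for how much compression and trimming can save on Data Transfer Out, the snippet below gzips a hypothetical JSON response in Python and compares sizes. Actual ratios depend on your payloads; already-compressed media such as JPEG images or MP4 video gains little from gzip, which is why image and video optimization is a separate lever.

```python
import gzip
import json

# Hypothetical API response: records with repetitive keys, typical of JSON APIs.
records = [
    {"id": i, "status": "active", "description": "product listing"}
    for i in range(1000)
]
raw = json.dumps(records).encode("utf-8")
compressed = gzip.compress(raw)

print(f"raw: {len(raw):,} bytes, gzip: {len(compressed):,} bytes "
      f"({100 * len(compressed) / len(raw):.1f}% of original)")

# Dropping fields the client never uses shrinks the payload before compression too.
trimmed = json.dumps([{"id": r["id"]} for r in records]).encode("utf-8")
print(f"trimmed: {len(trimmed):,} bytes")
```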

S3 Storage

S3 TimedStorage tells you how much money you’re spending on S3 storage. S3 offers different storage classes, such as Standard, Standard Infrequent Access, One Zone Infrequent Access, Intelligent-Tiering, Glacier and Glacier Deep Archive.

This usage type is measured in GB-months, and choosing the right storage class depends on each object’s access patterns as well as your availability requirements. Standard is the most expensive storage class, and it’s common to find opportunities to reduce its cost by choosing the right storage class or enabling features such as Object Lifecycle Management.
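As an illustration of Object Lifecycle Management, the sketch below builds a lifecycle rule in Python and applies it with boto3’s put_bucket_lifecycle_configuration. The prefix, the day thresholds and the bucket name are hypothetical; pick them based on how your objects are actually accessed. Note that this call replaces the bucket’s entire existing lifecycle configuration.

```python
def lifecycle_rule(prefix, ia_days=30, glacier_days=90, expire_days=None):
    """Build one lifecycle rule that moves objects under `prefix` to cheaper
    storage classes. The day values are illustrative defaults, not recommendations."""
    if ia_days < 30:
        # S3 only transitions objects to STANDARD_IA once they are at least 30 days old.
        raise ValueError("ia_days must be at least 30")
    if glacier_days <= ia_days:
        raise ValueError("glacier_days must be greater than ia_days")
    rule = {
        "ID": f"tiering-{prefix.strip('/') or 'all'}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_days, "StorageClass": "GLACIER"},
        ],
    }
    if expire_days is not None:
        rule["Expiration"] = {"Days": expire_days}  # delete objects after this age
    return rule


def apply_lifecycle(bucket, rules):
    """Replace the bucket's lifecycle configuration with `rules`
    (needs boto3 and AWS credentials)."""
    import boto3  # lazy import so lifecycle_rule() works without boto3
    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration={"Rules": rules},
    )


# Usage, with a hypothetical bucket and prefix:
# apply_lifecycle("my-app-logs", [lifecycle_rule("logs/", expire_days=365)])
```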

Others

Given the large number of AWS services, there are a lot of usage patterns that can result in unnecessarily high cost, depending on the nature of your applications. In this article I’ve mentioned only some of the most common ones, but every application is different. That’s why one of the first steps in identifying cost reduction opportunities is identifying the top usage types as well as those AWS resources that consume them the most. Once you have that data, the next step is to see what can be changed either in your AWS architecture, AWS configuration or application code, in order to reduce relevant usage types.

Step 3 - Confirm the right balance of Performance, Availability and Operational Excellence

Remember, cost is only one variable. At the end of the day you have to run reliable applications that will deliver a great customer experience and help your business grow. This means applications have to meet a number of requirements for Performance, Availability, Cost, Security and Operations.

That’s why cost optimizations shouldn’t impact other areas of your AWS architecture to the point that your applications no longer meet your business requirements. Also, Security shouldn’t be on the table, except in very exceptional situations where an optional or unnecessary security feature makes up a high percentage of your AWS bill.

Here are some important steps to take, based on the top AWS usage types and resources by cost that you’ve already identified:

a) Identify affected applications, components and deployment stages by cost

Let’s say you’re paying considerable money for some expensive EC2 instances. The first step would be to identify the application(s) and the underlying components that use those instances (e.g. web server, batch jobs, database). It’s also important to identify whether they belong to production or pre-production environments (e.g. development, QA, staging).
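If your resources carry cost allocation tags, the Cost and Usage Reports can answer this question directly. The Python sketch below assumes hypothetical user-defined tags named Application and Environment that have been activated as cost allocation tags, which makes them appear as resourceTags/user:Application and resourceTags/user:Environment columns in the report. The sample rows are made up.

```python
import csv
import io
from collections import defaultdict

APP_COL = "resourceTags/user:Application"  # assumed tag key
ENV_COL = "resourceTags/user:Environment"  # assumed tag key


def cost_by_app_and_env(csv_file):
    """Sum unblended cost by (application, environment); missing tags count as 'untagged'."""
    totals = defaultdict(float)
    for row in csv.DictReader(csv_file):
        app = row.get(APP_COL) or "untagged"
        env = row.get(ENV_COL) or "untagged"
        totals[(app, env)] += float(row["lineItem/UnblendedCost"] or 0)
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


# Hypothetical sample rows.
SAMPLE = """lineItem/UnblendedCost,resourceTags/user:Application,resourceTags/user:Environment
120.00,checkout,production
45.50,checkout,staging
30.00,,
"""

for (app, env), cost in cost_by_app_and_env(io.StringIO(SAMPLE)).items():
    print(f"{app:10} {env:12} ${cost:,.2f}")
```

A large “untagged” bucket is itself a useful finding: it tells you which resources you can’t yet attribute to an application or environment.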

b) Identify main business transactions supported by identified applications and components

Once you identify the most expensive applications and components, it’s time to see how critical they are to your business and whether their AWS cost makes sense. Start by identifying the business transactions they support, that is, the user interactions that depend on each expensive component: user login, users browsing through your content, logged-in users managing their profiles, report generation, etc.

c) Identify Performance requirements for identified transactions and stages (dev, QA, production, etc.)

Once you identify the main affected business transactions by AWS cost, it’s time to think about Performance requirements. These requirements can be summarized in two areas:

- Throughput. How many transactions per unit of time your architecture needs to support. For example, 5 page loads per second, 100 API calls per second, 1 login per minute, etc.

- Response Time. How quickly users should get a response.

For example, generating an overnight report might not be as critical in terms of performance compared to users arriving on your landing page. User login might be a very high volume and critical part of your application, or it might be something that doesn’t happen often.

Answers to these questions will drive decisions such as EC2/RDS instance type and size (e.g. required vCPUs and RAM), disk allocation (e.g. IOPS), number of EC2 instances, etc.
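As a rough illustration of how a throughput requirement translates into an instance count, here is a small Python sketch. The per-instance throughput is assumed to come from your own load tests (measured while still meeting your response-time target), and the 30% headroom is a hypothetical default.

```python
import math


def instances_needed(peak_tps, tps_per_instance, headroom=0.3, minimum=1):
    """Instances required to serve `peak_tps` transactions per second.

    tps_per_instance: throughput one instance sustains while meeting the
    response-time target (from a load test). headroom: fraction of capacity
    kept in reserve for unexpected spikes.
    """
    required = peak_tps / (tps_per_instance * (1 - headroom))
    return max(minimum, math.ceil(required))


# Hypothetical: 100 API calls/second at peak, 40 calls/second per instance.
print(instances_needed(peak_tps=100, tps_per_instance=40))  # 4
```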

d) Identify Availability requirements for identified transactions and stages

- What is the application and stage tolerance for downtime? For example, what happens if that particular component in a particular deployment stage goes down for: 1 minute, 5 minutes, 30 minutes, 1 hour?

- What components and/or processes do you need in order to comply with your availability requirements?

The answers to those questions will drive decisions about compute redundancy (e.g. multi-AZ RDS and EC2 deployments, number of EC2 instances), data backups (e.g. EBS/RDS snapshot creation and retention), data redundancy (e.g. RDS multi-AZ or global deployments), and automated recovery processes, among others. These are all areas that drive AWS cost.
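The downtime questions above become easier to answer once an availability target is translated into minutes. This Python sketch does the arithmetic for a 30-day month; the targets in the loop are just examples.

```python
def allowed_downtime_minutes(availability_pct, days=30):
    """Downtime budget implied by an availability target over `days` days."""
    return days * 24 * 60 * (1 - availability_pct / 100)


for target in (99.0, 99.9, 99.95, 99.99):
    print(f"{target}% -> {allowed_downtime_minutes(target):.1f} minutes per 30 days")
```

For example, 99.9% leaves roughly 43 minutes per month. If a component can tolerate that, paying for extra redundancy to reach 99.99% (about 4 minutes) may not be justified.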

Step 4 - Apply Corrective Actions

Now that you know where you’re spending money and what your applications need, it’s time to make some cost-cutting decisions. Here are some common areas to focus on:

Right-size and Optimize Infrastructure

If CloudWatch metrics show a degree of under-utilization for some of the top usage types by cost, then right-sizing should be an option to consider. Let’s say you have a c5.2xlarge EC2 instance with a constant 10% CPU Utilization; it might be worth provisioning a smaller EC2 instance instead. The recommended steps are the following:

- Find top usage types by cost and their associated AWS resources (EC2/RDS instances, S3 buckets, DynamoDB tables, etc.)

- Analyze CloudWatch metrics for the identified AWS resources. Make sure you analyze them over a long enough period of time to catch potential usage spikes, for example a full month’s worth of metrics.

- Make sure that usage for the analyzed period of time is consistent with expected traffic.

- If your application has seasonal spikes (e.g. Black Friday, marketing campaigns), calculate how usage during those periods compares to the period you analyzed. See if it’s possible to downsize your AWS components outside of those seasonal spikes and then increase capacity in preparation for them.

- Determine the right capacity for identified components. For example, EC2 instance size or RDS DB instance size.

- Enable AWS Compute Optimizer, which is a tool that identifies optimal EC2 infrastructure, based on existing metrics and usage patterns.
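The capacity decision in the steps above can be sketched as a small Python helper: given the peak CPU observed over your analysis window, it picks the smallest instance size that would keep that peak under a target. It assumes CPU load scales linearly with vCPU count and ignores memory, network and burst behavior, so treat the result as a starting point for testing rather than a final answer. The 60% target is a hypothetical choice, and the table only covers part of the c5 family.

```python
# vCPU counts for part of the c5 family.
C5_VCPUS = {"c5.large": 2, "c5.xlarge": 4, "c5.2xlarge": 8, "c5.4xlarge": 16}


def smallest_fitting_size(current_type, peak_cpu_pct, target_peak_pct=60.0, sizes=C5_VCPUS):
    """Smallest size that would keep the observed peak CPU under target_peak_pct,
    assuming load scales linearly with vCPUs. Returns None if nothing fits."""
    busy_vcpus = sizes[current_type] * peak_cpu_pct / 100
    needed_vcpus = busy_vcpus / (target_peak_pct / 100)
    for size, vcpus in sorted(sizes.items(), key=lambda kv: kv[1]):
        if vcpus >= needed_vcpus:
            return size
    return None


# The c5.2xlarge example from the text, using 10% as the observed peak.
print(smallest_fitting_size("c5.2xlarge", 10.0))  # c5.large
```

Using the peak rather than the average is deliberate: it keeps the recommendation honest about the usage spikes the analysis window is meant to capture.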

Optimize Applications

Sometimes AWS cost issues are caused by applications that consume infrastructure resources in a sub-optimal way. Common examples are heavy SQL statements or code paths that result in heavy CPU, memory or disk utilization. Here are some recommendations you can apply to high-cost AWS resources:

- Install an Application Performance Monitoring (APM) tool, such as New Relic or Datadog. AWS X-Ray can also help identify application components that consume a large amount of compute time.

- Enable RDS Performance Insights. This tool can help you visualize heavy queries and processes affecting DB performance and therefore requiring a higher amount of compute capacity and costing more money.

- Use CloudFront, ElastiCache or application-level components that help with caching, which can reduce the volume of transactions handled by backend components.
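To illustrate why caching reduces backend load (and therefore the capacity you pay for), here is a minimal in-process TTL cache in Python. It is only a stand-in for ElastiCache or CloudFront: it has no size limit or eviction, and load_product is a hypothetical placeholder for a database query or API call.

```python
import time


class TTLCache:
    """Minimal in-process cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (value, time stored)

    def get_or_load(self, key, loader):
        now = self.clock()
        hit = self._entries.get(key)
        if hit is not None and now - hit[1] < self.ttl:
            return hit[0]
        value = loader(key)  # the backend is only reached on a miss or expired entry
        self._entries[key] = (value, now)
        return value


backend_calls = 0


def load_product(product_id):
    """Hypothetical backend call, e.g. a SQL query."""
    global backend_calls
    backend_calls += 1
    return {"id": product_id}


cache = TTLCache(ttl_seconds=60)
for _ in range(1000):
    cache.get_or_load("product-42", load_product)
print(backend_calls)  # 1
```

A thousand requests within the TTL reach the backend once; the same principle is what lets a managed cache reduce the database or compute capacity you need.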

Delete, Terminate or Stop

A quick way to reduce cost is to identify idle resources, such as EC2/RDS instances, and terminate or stop them. As described earlier, CloudWatch Metrics are an essential tool in this step. Find those RDS instances with 0 connections for a certain period of time, or EC2 instances with < 1% CPU Utilization or a DynamoDB table with no read/write operations, etc. AWS Trusted Advisor has a section where it identifies potentially idle resources, which can help with this task as well.

Purchase Discounts

Once you’ve identified and applied optimizations at both the application and infrastructure level, it’s a good time to consider purchasing discounts. There are two main categories in this area:

- Reserved purchases. This includes the widely known Reserved Instances strategy, where you commit to an amount of compute capacity for either a 1-year or a 3-year term and get a discount ranging from about 30% to about 70%, depending on the chosen terms. This applies to services such as EC2, RDS, EMR, Redshift, ElastiCache and Elasticsearch. You can also purchase reserved read/write capacity for DynamoDB tables and save up to 80% compared to On Demand pricing.

- AWS Savings Plans. Similar to Reserved Instances, AWS Savings Plans deliver discounts to customers who commit to a consistent amount of usage (measured in $/hour) for a 1-year or 3-year term. Compute Savings Plans offer more flexibility than EC2 Reserved Instances, since they are not tied to a particular instance family, size, OS, region or tenancy, and they also apply to Fargate (ECS and EKS) and Lambda usage. EC2 Instance Savings Plans offer larger discounts, but they are tied to an instance family within a region.

It’s very important that this step takes place after optimizations have been applied, otherwise you could end up spending money on long term commitments that could’ve been avoided by applying optimizations first.
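One way to see why discounts should come after optimization is to compute the break-even utilization of a reservation. This Python sketch assumes you pay for every hour of the term at the discounted rate, and it ignores upfront payment options and the time value of money; the 40% discount is just an example within the range mentioned above.

```python
def breakeven_utilization(discount_pct):
    """Fraction of hours an instance must run for a reservation to beat On Demand.

    A reservation is paid for every hour of the term, so it costs
    (1 - discount) x the On Demand hourly price whether or not it is used.
    """
    return 1 - discount_pct / 100


def reservation_saves_money(discount_pct, expected_utilization):
    """True if the expected share of hours in use exceeds the break-even point."""
    return expected_utilization > breakeven_utilization(discount_pct)


print(f"With a 40% discount, a reservation pays off above "
      f"{breakeven_utilization(40):.0%} utilization")
```

If right-sizing or terminating instances later drops utilization below that break-even point, the reservation ends up costing more than On Demand would have.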

Negotiate Discounts

If your usage reaches a certain volume, there are areas where you can negotiate a better rate. For example, CloudFront offers discounted rates if you commit to at least 10TB of Data Transfer per month. If you have another service with very high usage, reach out to an AWS Product Manager or Sales Manager for that service and ask for special pricing on specific usage types. Keep in mind that in most cases this only applies to usage types worth thousands of dollars.

Step 5 - Iterate

Reducing AWS cost is a continuous, iterative process. Sometimes it’s possible to get significant savings by applying relatively simple updates, but there are often cost areas that need to be monitored and refined constantly. That’s why it’s essential to approach cost savings with a long-term mindset and be aware that in many cases it’ll take at least 3-4 billing cycles to achieve an optimal AWS cost situation. After that, it’s very important to monitor cost on a regular basis and avoid any future “Bad AWS Billing Surprises”.

It typically looks like this:

- Find and fix the “Quick Wins”. These are items that have a good return and relatively low effort.

- Focus on long-term cost savings opportunities. These are areas that often result in infrastructure redesign, reconfiguration, testing or even rewriting some application components.

- Analyze AWS Cost and Usage Reports at least weekly, in order to validate cost reductions and find new opportunities.

- In each iteration, apply the 5 steps described in this article.

- Keep a mechanism in place to constantly monitor cost and avoid problems in the future.

To Summarize

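Here are the 5 steps described in this article:

- Step 1 - Get All Relevant Data
- Step 2 - Identify Anomalies and Corrective Actions
- Step 3 - Confirm the right balance of Performance, Availability and Operational Excellence
- Step 4 - Apply Corrective Actions
- Step 5 - Iterate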

Do you need help lowering your AWS cost?

Don’t overspend on AWS. If you need help lowering your AWS bill, I can save you thousands of dollars (I’ve done it time and again).